Google is preparing a Chrome “Search Companion” as a helpful new way to search the web, using Lens to give additional context about the current page.


One great way to get more information while browsing the web in Chrome is to right-click an image and choose “Search Image with Google.” With the help of Google Lens, you’ll usually find out pretty quickly what you’re looking at, along with resources for learning more.

Google is now looking to forge a deeper connection between Lens and Chrome with a new feature called “Search Companion.” When you open the Search Companion, which will live in Chrome’s recently launched sidebar menu, the browser will begin sending information about the current page to the companion.

Specifically, Chrome currently collects the web page’s title and the images that are visible on screen, as well as the current set of “trending searches” that Chrome’s address bar can display. The Search Companion feature then sends all of this information to a web app, which, judging by a “staging URL” found in code, is set to be part of Google Lens. We’ve managed to enable a very early preview of Search Companion, which only shows the data that would normally be sent.
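To make the shape of that data a bit more concrete, here is a purely illustrative Python sketch of how the three pieces (page title, visible images, and trending searches) might be bundled together. Everything in it is our own assumption: the `PageContext` class, its field names, the `build_companion_payload` helper, the JSON format, and the example URL are hypothetical and are not taken from Chrome’s code.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PageContext:
    """Hypothetical container for the three kinds of data described above."""

    page_title: str
    visible_image_urls: list[str] = field(default_factory=list)
    trending_searches: list[str] = field(default_factory=list)


def build_companion_payload(context: PageContext) -> str:
    """Serialize the page context to JSON.

    The JSON format is an assumption made for illustration; the real
    wire format used by Chrome is not known.
    """
    return json.dumps(asdict(context), sort_keys=True)


# Example values are invented for illustration only.
context = PageContext(
    page_title="Google Pixel 7",
    visible_image_urls=["https://example.com/pixel-7.png"],
    trending_searches=["pixel 7 deals"],
)
payload = build_companion_payload(context)
print(payload)
```

A companion web app receiving something like this would have the page title and on-screen images available as context for whatever the user types next.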

While we don’t know precisely how Google Lens will use this data, the “Search Companion” name makes it pretty clear that it’ll use what’s on your screen to understand the context of your next search.

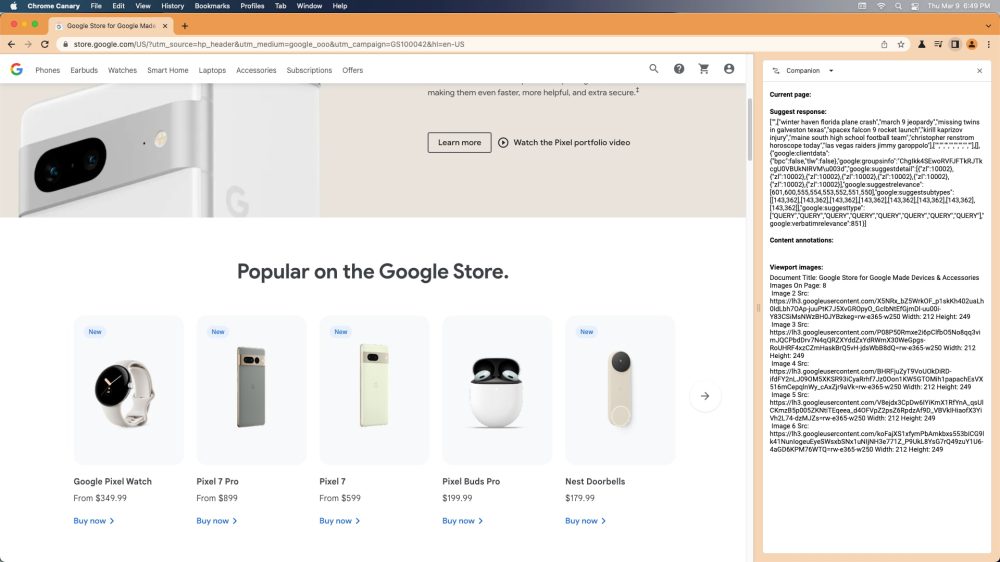

One potential example that comes to mind is being on the product page for the Google Pixel 7, opening the companion and simply searching for “deals.” Using the page title, the tool will know you’re looking for the Pixel 7, and based on what images are on screen, it may even know which color you’re specifically looking for.

In a way, Chrome Search Companion may be quite a bit like the “Multisearch” feature that arrived in Google Lens last year, which allows you to combine an image and text to narrow down your search results. In the example given at the time, you could start by searching with a picture of a dress, then add the word “green” to find similar clothing in a different color.

With Google’s “Bard” chatbot on the horizon and a broader push for AI-powered tools within the company, one might expect that Chrome Search Companion would also be built on AI tools. However, multisearch in Google Lens is notably not built with the company’s “MUM” (Multitask Unified Model) technology. That being the case, it’s possible that Chrome Search Companion could also be built on traditional tech, but we simply can’t be sure until it launches.

On that note, considering that development has been ongoing since at least November 2022, and that the feature should be arriving soon in chrome://flags, we believe Chrome Search Companion could potentially be unveiled as part of Google I/O in May. In the meantime, we’ll continue keeping an eye on the feature as it develops.
