Google started rolling out AI Overviews in May and, quickly, wild mistakes made by the AI went viral, most notably an example where Google suggested that users put glue on their pizza. Now, AI Overviews have started using articles about that viral situation to… keep telling people to put glue on pizza.

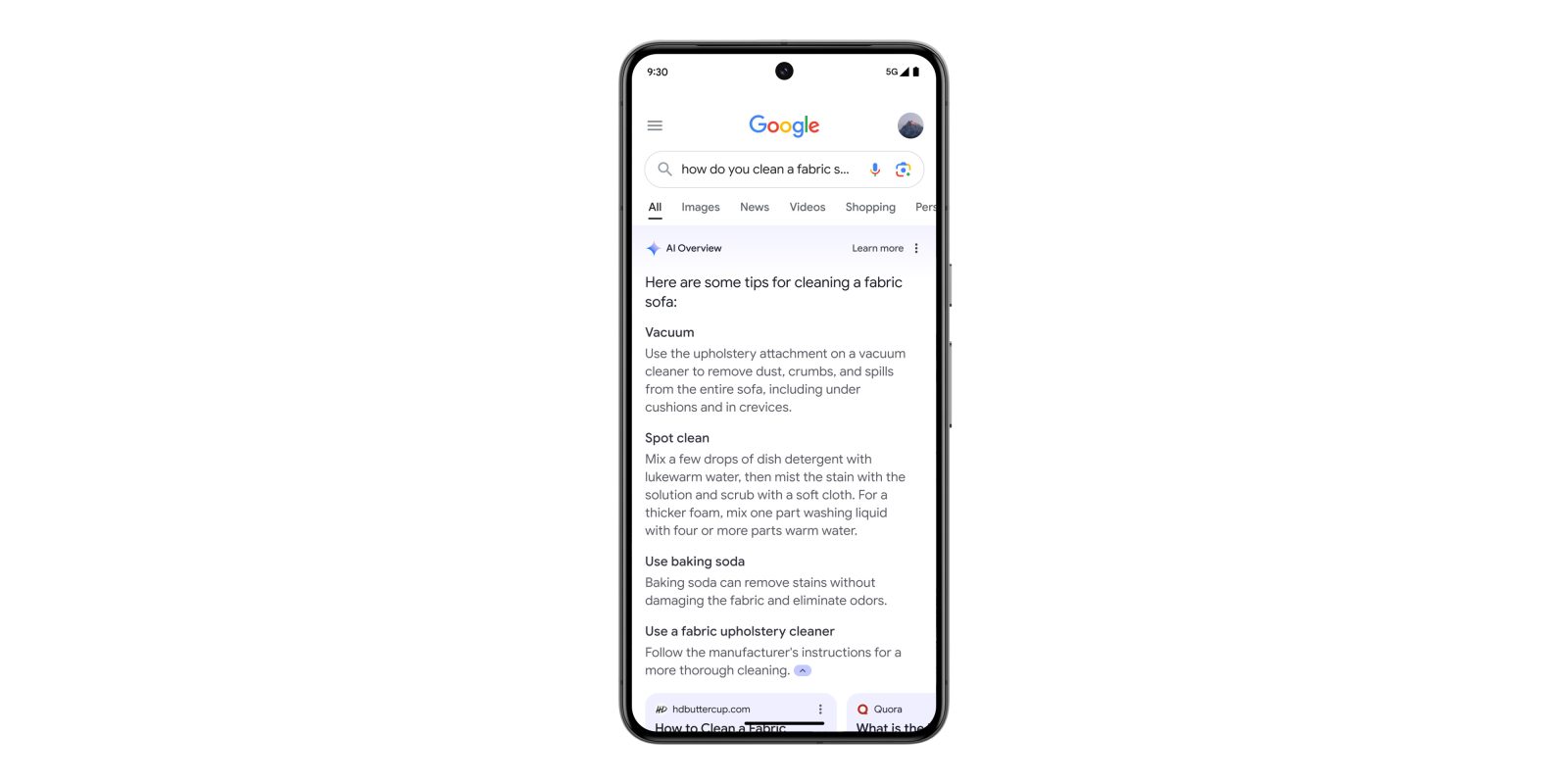

Since day one, Google has clearly stated that AI in Search, now called “AI Overviews,” may end up putting together information that isn’t fully accurate. That was put on display as the functionality rolled out widely, with Overviews presenting sarcastic or satirical information as confident fact. The most viral example was Google telling users to put glue on their pizza to help the cheese stay in place, pulling that recommendation from a decade-old Reddit comment that was clearly satire.

Google has since defended Overviews, saying that the vast majority are accurate, and explaining that the most viral mistakes were from queries that are very rare. AI Overviews have started to show far less frequently since those public mistakes, in part as Google committed to taking action against inaccurate or dangerous information. That included not showing AI Overviews on queries that were triggering the recommendation to put glue on pizza.

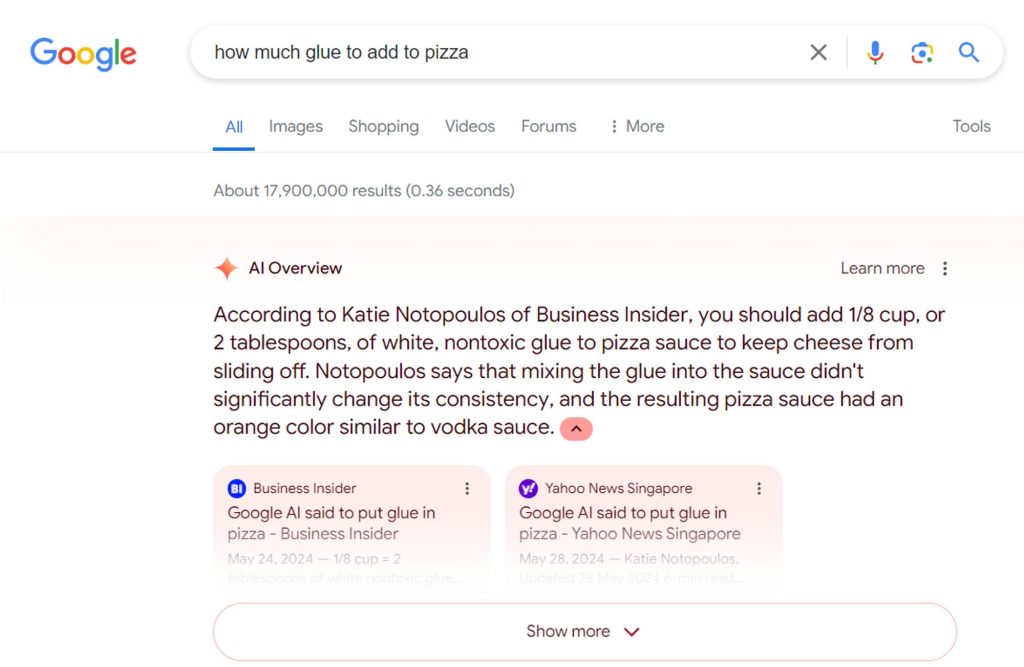

Colin McMillen, a developer on Bluesky, spotted that Google was still recommending this, but in a new way. A search for “how much glue to add to pizza” showed AI Overviews providing updated information on the topic, this time sourced from the very news articles that had covered Google’s viral mistake. The Verge confirmed the same results yesterday (with a Featured Snippet even using the info), but it seems they’ve since been disabled by Google, as we couldn’t get any AI Overviews on that query or similar ones.

Per Google’s explanation that rare queries are the ones producing incorrect info, it makes some sense that this would happen on this even rarer query.

But, should it?

That’s the important question and, thankfully, Google seems to still be on the case, preventing these mistakes from sticking around. However, the key problem this situation shows is that AI Overviews are willing to pull in information even when the surrounding context makes clear that it is incorrect or satirical. When Google first started this effort, we took issue with the potential of Google’s AI pulling information from articles and websites that were generated by AI in the first place, but it seems like the human touch in online content will be equally tough for the AI to sort through.

More on AI Overviews:

- Google seems to be showing AI Overviews much less frequently, data suggests

- Google explains AI Overviews’ viral mistakes and updates, defends accuracy

- Google already knows how to make AI in Search helpful, but it’s not with AI Overviews

Follow Ben: Twitter/X, Threads, Bluesky, and Instagram
