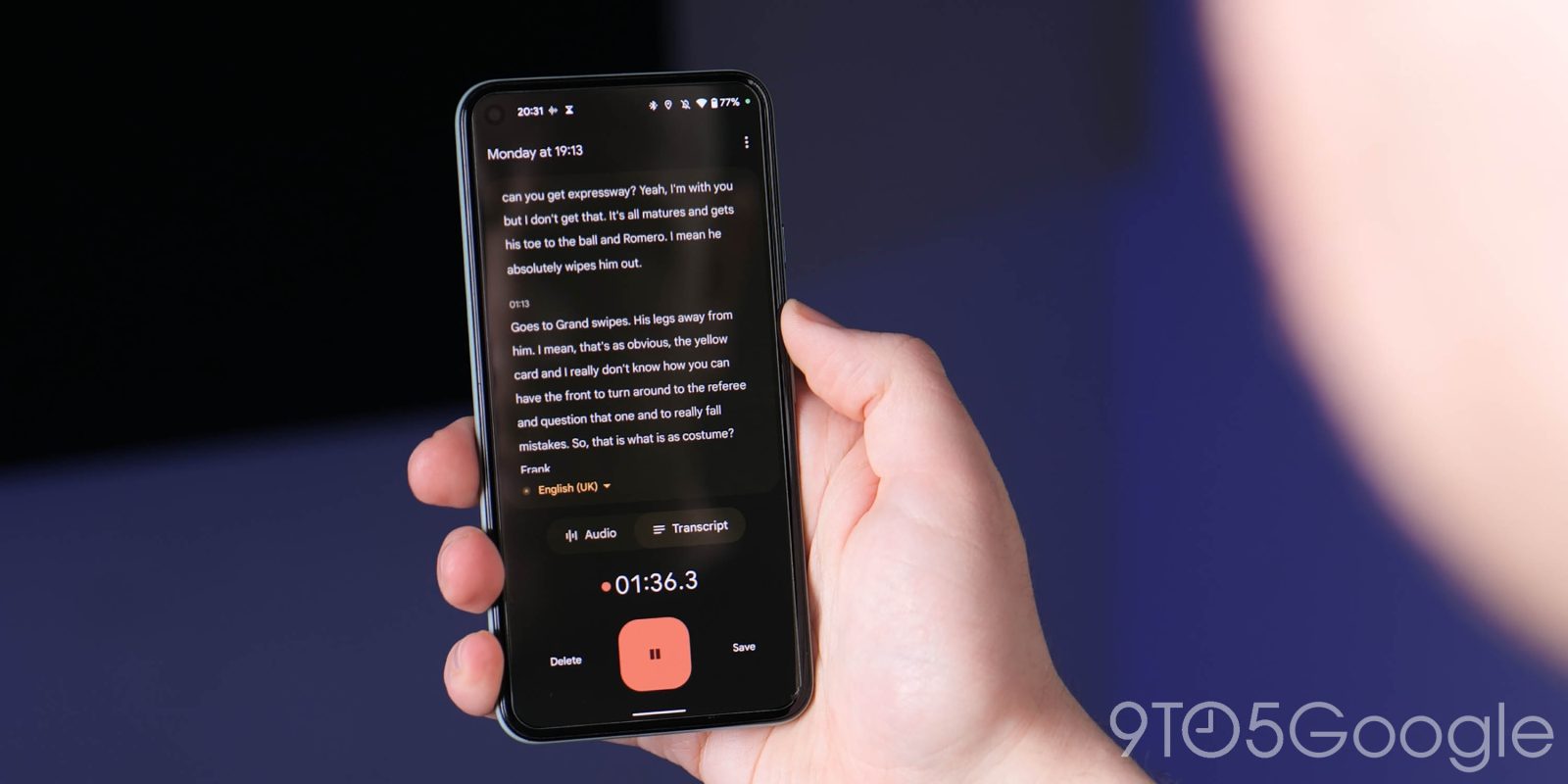

Recorder is one of Google’s best applications of on-device machine learning (ML), and it is exclusive to Pixel phones. Surprisingly, the transcription app originated inside the Google Creative Lab, and the company is now setting out to build more “Pixel Smarts.”

In addition to capturing audio, Recorder transcribes spoken words in real time without an internet connection. This speech-to-text processing happens entirely on your Pixel, so transcripts stay private and can be searched instantly.

The app can also label sounds like music and even laughter, while a Smart Scrolling feature “marks important sections in the transcript” with keywords for fast browsing. Later updates added shareable clip creation with editing, cloud backup with link-based sharing, and a Material You redesign with a tablet-esque landscape mode.

Recorder, of course, takes advantage of machine learning advancements from Google Research, but the app was in large part created by the Google Creative Lab, which describes itself as:

> A team of designers, writers, programmers, filmmakers, producers and business thinkers that spend 99.9% of our time making. We are a small team that pushes for an impact that outweighs our footprint. Our job is to help invent Google’s future and communicate Google’s innovations in ways that make them useful to more people.

The Creative Lab covers a lot of ground, from advertising (most famously “Parisian Love”) and branding to products both big and small. The latter include many one-off tools and experiences, often listed on Experiments with Google, while Project Guideline, which helps people with low vision run independently, is a “collaboration across Google Research, Google Creative Lab, and the Accessibility Team.”

In comparison, Recorder, which was built together with the Pixel Essential Apps team, is a bigger undertaking. Creative Lab took a relatively new ML development (an all-neural, on-device speech recognizer) and gave it a very practical application, which fits squarely with the group’s mission.

Looking ahead, Google wants to build more Pixel experiences in this vein and is hiring someone to lead “Pixel Smarts.” Amusingly, the company later generalized the listing to “Android System Intelligence,” the background service that powers many ML capabilities but is most feature-rich on Pixel. (The quotes below reference the original job listing.)

> As the Director of Product Management for Pixel Smarts, you will focus on creating differentiated experiences on Pixel that leverage our machine learning expertise. This will require a deep, innovative exploration of user needs and technological capabilities. In addition, you will contribute to and lead this exploration, build a product roadmap, and help to build a Product team.

Existing examples include Now Playing’s background song detection from the Pixel 2, more battery-efficient automatic speech recognition for Recorder and Live Caption, and the on-device Assistant along with Gboard voice typing.

Google wants the Pixel “to lean into advanced machine learning and build out differentiated smart features while taking advantage of unique Pixel hardware and function integration.”
