As of late, there’s been increased chatter about AI agents that take a command and physically perform the task, including the needed taps and swipes, on your phone. This talk of building an AI agent reminds me a great deal of the “new Google Assistant” that was announced with the Pixel 4 in 2019.

At I/O 2019, Google first demoed this next-generation Assistant. The premise was that on-device voice processing would make it so that “tapping to operate your phone would almost seem slow.”

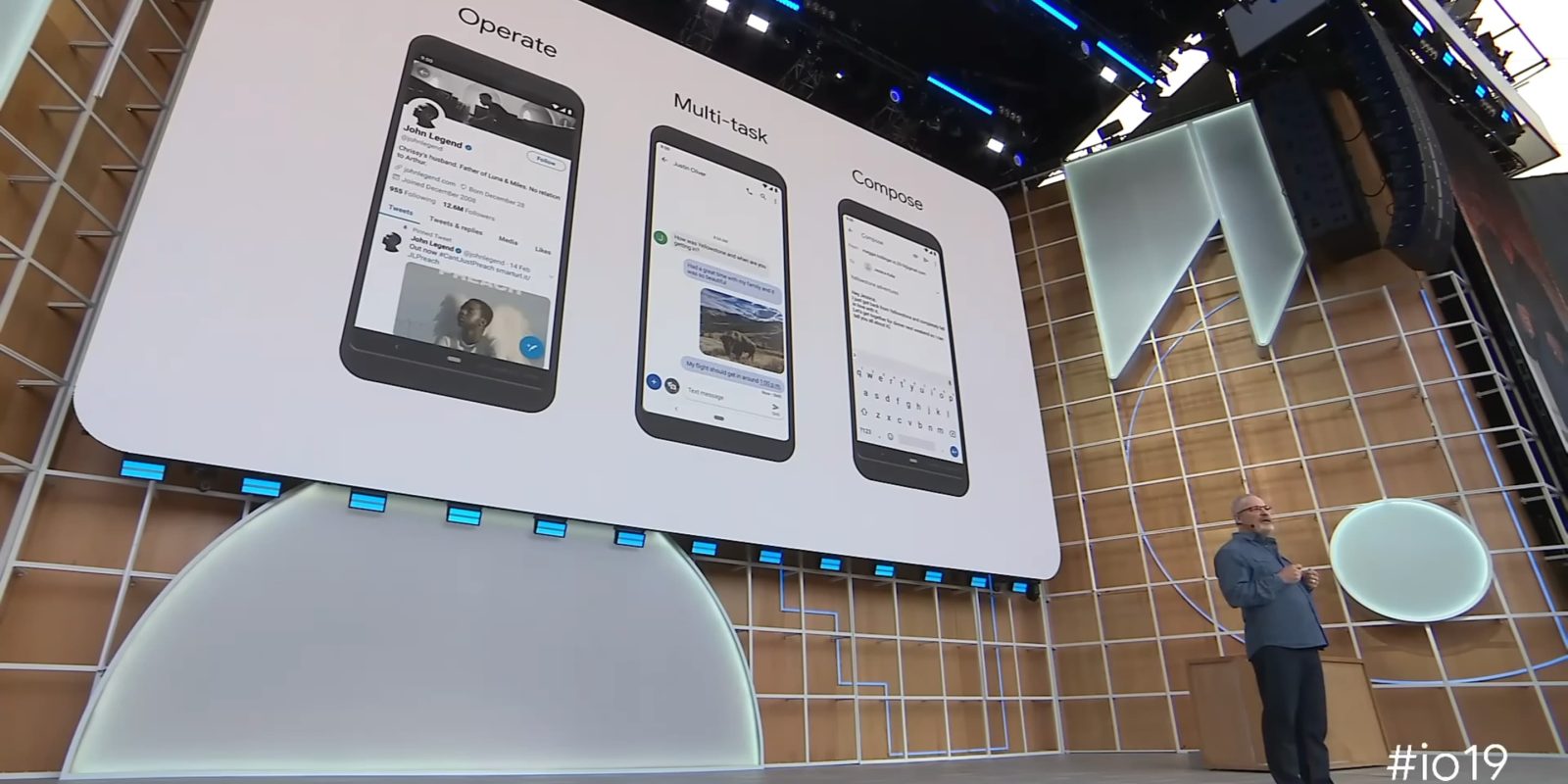

Google demoed simple commands that involve opening and controlling apps, while the more complex idea was “how the Assistant fused into the device could orchestrate tasks across apps.” The example was receiving an incoming text, replying via voice, and then getting the idea to search for and send an accompanying photo. Being able to “Operate” and “Multi-task” was rounded out by a natural language “Compose” capability in Gmail.

“This next-generation Assistant will let you instantly operate your phone with your voice, multitask across apps, and complete complex actions, all with nearly zero latency.”

The new Assistant launched on the Pixel 4 later that year and has been available on all subsequent Google devices. Example commands include:

- “Take a selfie.” Then say “Share this with Ryan.”

- In a chat thread, say “Reply, I’m on my way.”

- “Search for yoga classes on YouTube.” Then say “Share this with mom.”

- “Show me emails from Michelle on Gmail.”

- With the Google Photos app open, say “Show me New York pictures.” Then say “The ones at Central Park.”

- With a recipe site open on Chrome, say “Search for chocolate brownies with nuts.”

- With a travel app open, say “Hotels in Paris.”

This is the fundamental idea behind AI agents. During an Alphabet earnings call last month, Sundar Pichai was asked about generative AI’s impact on Assistant. He said it would allow Google Assistant to “act more like an agent over time” and “go beyond answers and follow through for users.”

According to The Information this week, OpenAI is working on such a ChatGPT agent:

“Those kinds of requests would trigger the agent to perform the clicks, cursor movements, text typing and other actions humans take as they work with different apps, according to a person with knowledge of the effort.”

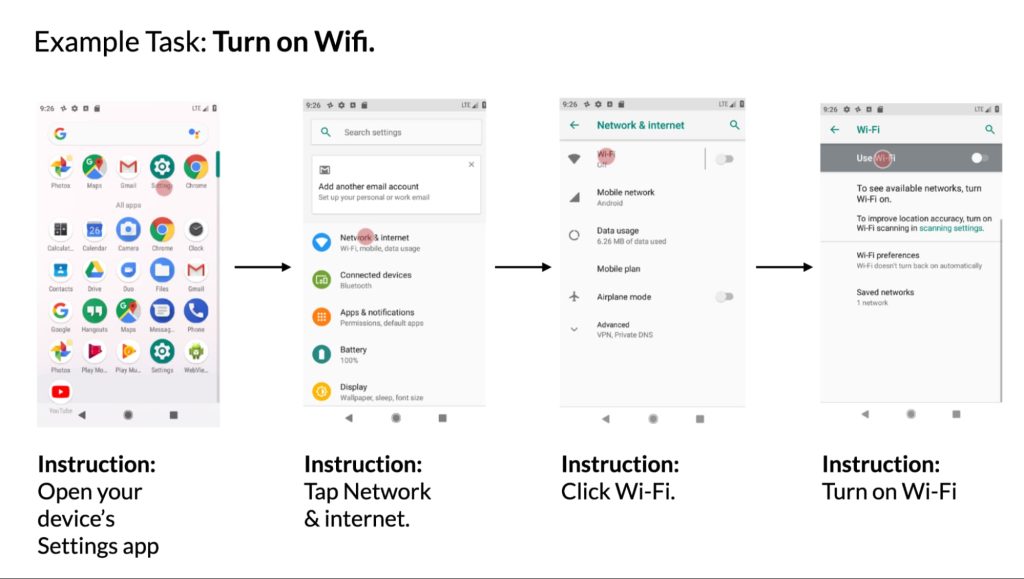

Then there is Rabbit with its large action model (LAM) trained to interact with existing mobile and desktop interfaces to complete a set task.

The version Google Assistant had in 2019 felt very pre-programmed, requiring users to stick to certain phrasing rather than letting them speak naturally and then automatically discerning the action. At the time, Google said Assistant “works seamlessly with many apps” and that it would “continue to improve app integrations over time.” To our knowledge, that never really happened, and some of the capabilities that Google showed off no longer work because the apps have changed. A true agent would be able to adapt instead of relying on set conditions.
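To make the “pre-programmed” complaint concrete, here is a minimal Python sketch of what fixed-phrase matching can look like. It is purely illustrative: the patterns, the action names, and the `match_command` helper are assumptions made up for this example, not how Google Assistant is actually implemented.

```python
import re

# Hypothetical command table: each fixed phrasing maps to one action name.
# Patterns and action names are illustrative only, not Assistant's real grammar.
COMMAND_PATTERNS = [
    (re.compile(r"^take a selfie$"), "open_front_camera"),
    (re.compile(r"^share this with (?P<contact>\w+)$"), "share_current_item"),
    (re.compile(r"^reply,? (?P<message>.+)$"), "reply_in_thread"),
]


def match_command(utterance):
    """Return (action, slots) for a recognized phrasing, or None otherwise."""
    # Normalize case and drop trailing punctuation before matching.
    text = utterance.strip().lower().rstrip(".!?")
    for pattern, action in COMMAND_PATTERNS:
        found = pattern.match(text)
        if found:
            return action, found.groupdict()
    # Anything outside the fixed phrasings is simply not understood.
    return None
```

With this table, `match_command("Share this with Ryan.")` resolves to the share action, while `match_command("Could you send this to Ryan?")` returns `None` even though the intent is the same. That gap between phrasing and intent is what an LLM-based agent would be expected to close.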

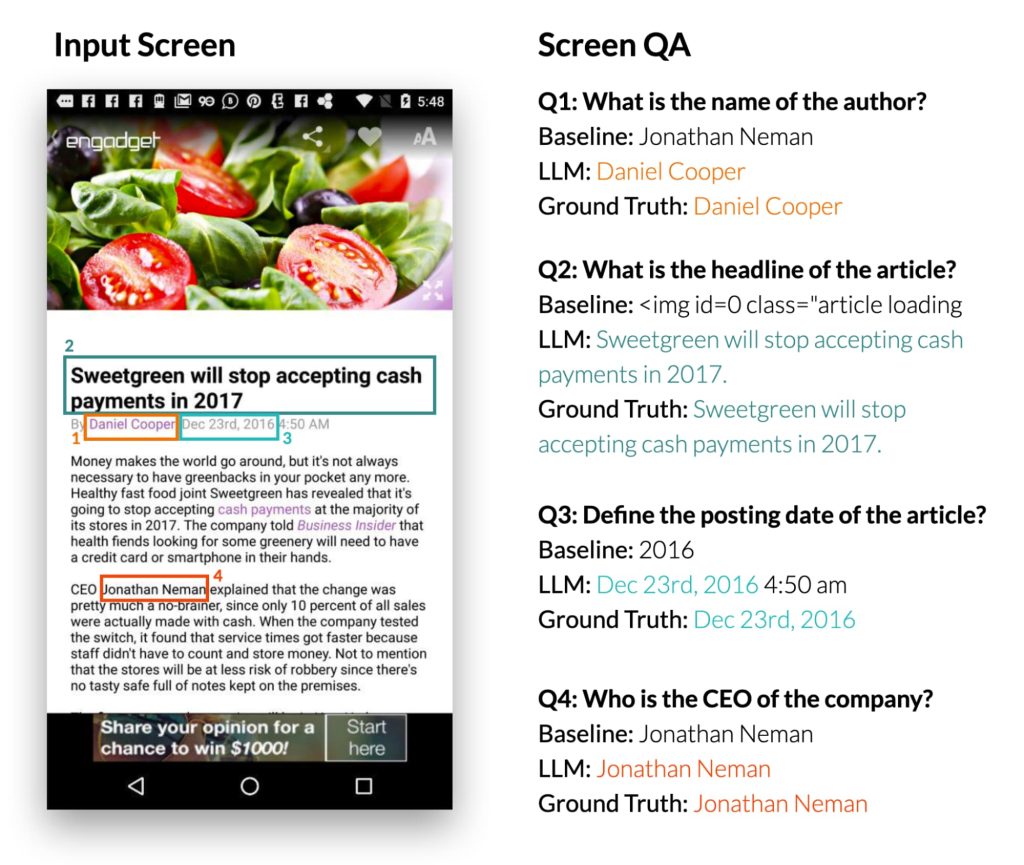

It’s easy to see how LLMs could improve on this, with Google Research last year showing off work on “Enabling Conversational Interaction with Mobile UI using Large Language Models.”

Google Research demonstrated that its approach can “quickly understand the purpose of a mobile UI”:

“Interestingly, we observed LLMs using their prior knowledge to deduce information not presented in the UI when creating summaries. In the example below, the LLM inferred the subway stations belong to the London Tube system, while the input UI does not contain this information.”

It can also answer questions about content that appeared in the UI, and control it after being given a natural language instruction.

A Gemini AI agent for your Android device would be the natural evolution of Google’s first attempt, which never really caught on as an all-encompassing Assistant that provided a new way to use your phone. However, features like transcribing a message response and then being able to say “send” live on in Gboard’s Assistant voice typing.

It does seem that the previous effort was a case of Google being too early to an idea and not having the required technology. Now that the technology is here, Google would be wise to prioritize this effort so that it can start leading the field instead of playing catch-up.
