In announcing Gemini 2.0, Google today shared the latest on Project Astra, while unveiling Project Mariner as an agent that can browse the web for you.

Google says a “new class of agentic experiences” is made possible by Gemini 2.0 Flash’s “native user interface action-capabilities.” It also credits improvements to “multimodal reasoning, long context understanding, complex instruction following and planning, compositional function-calling, native tool use and improved latency.”

These projects and prototypes, all built on Gemini 2.0, are still in the “early stages of development,” but “trusted testers” now have access to them and are providing feedback.

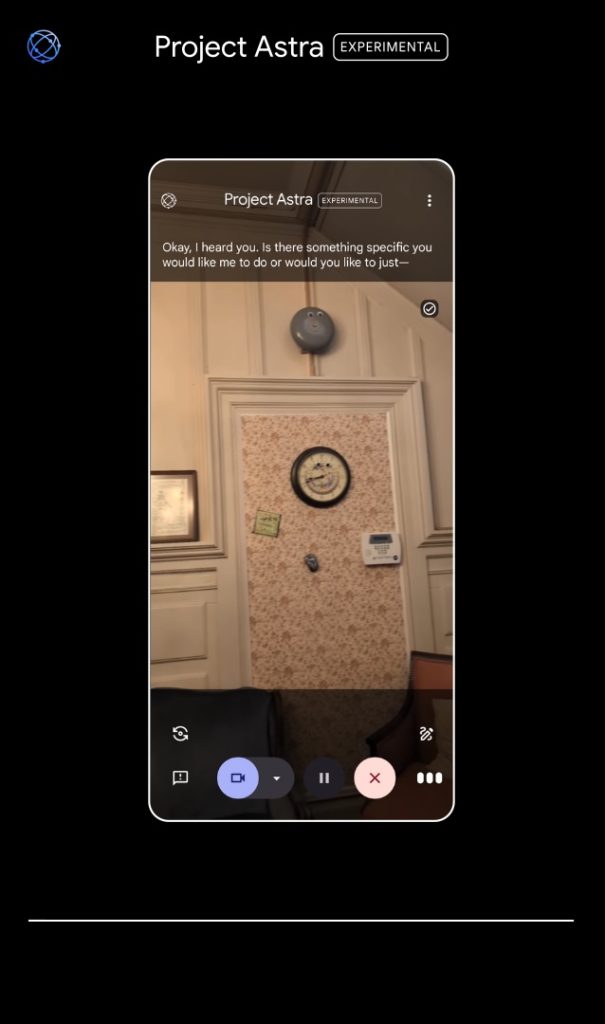

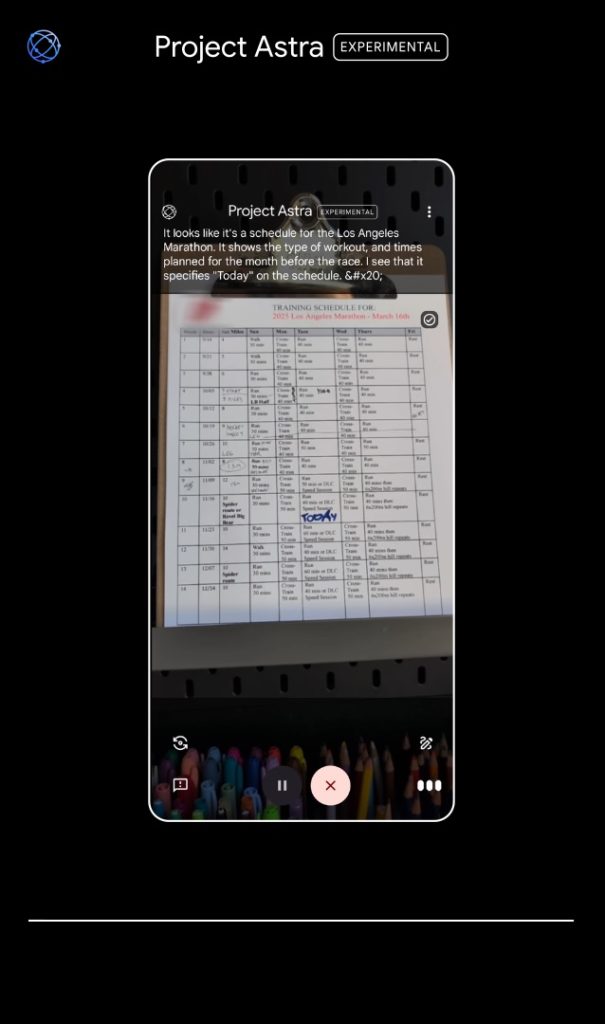

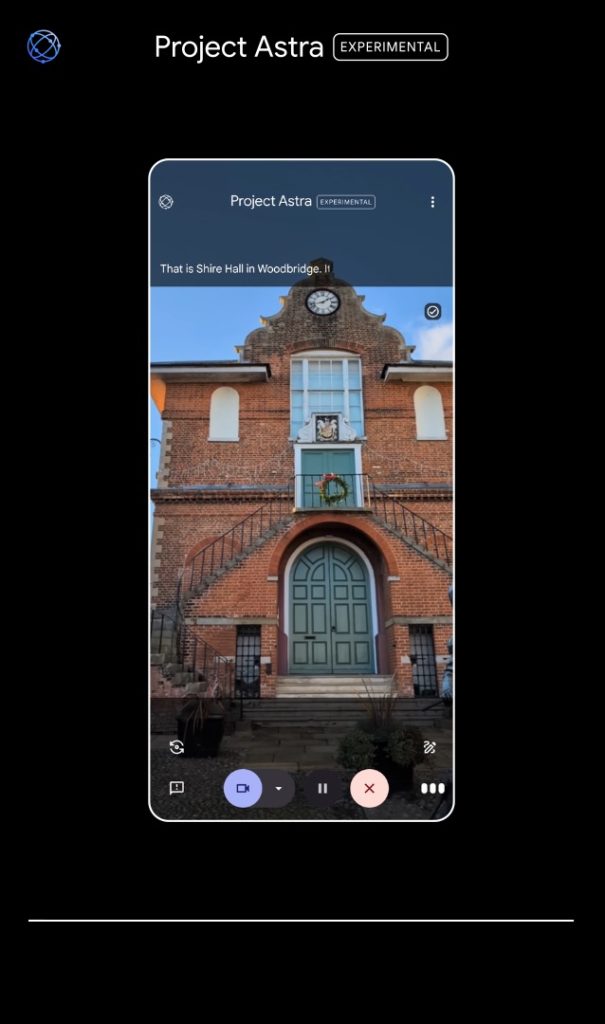

With Gemini 2.0, there are a number of updates to Project Astra — Google’s effort to build an assistant or “universal AI agent that is helpful in everyday life” — since it was shown off at I/O 2024 in May:

- Better dialogue: Astra can now converse in multiple languages and in mixed languages, while better understanding accents and uncommon words.

- New tool use: Astra can use Google Search, Lens, and Maps to help answer your prompts.

- Better memory: Astra “now has up to 10 minutes of in-session memory and can remember more conversations you had with it in the past, so it is better personalized to you.”

- Improved latency: Astra can now “understand language at about the latency of human conversation” thanks to native audio understanding and new streaming capabilities.

In a demo video that Google shared today, you see a Project Astra Android app with a viewfinder UI and the ability to analyze what’s on your display via screen sharing while it remains active as a chathead. This application is just for testing purposes. When Project Astra launches for consumers, it will be through the Gemini (Live) app. Google is also testing Astra on prototype glasses.

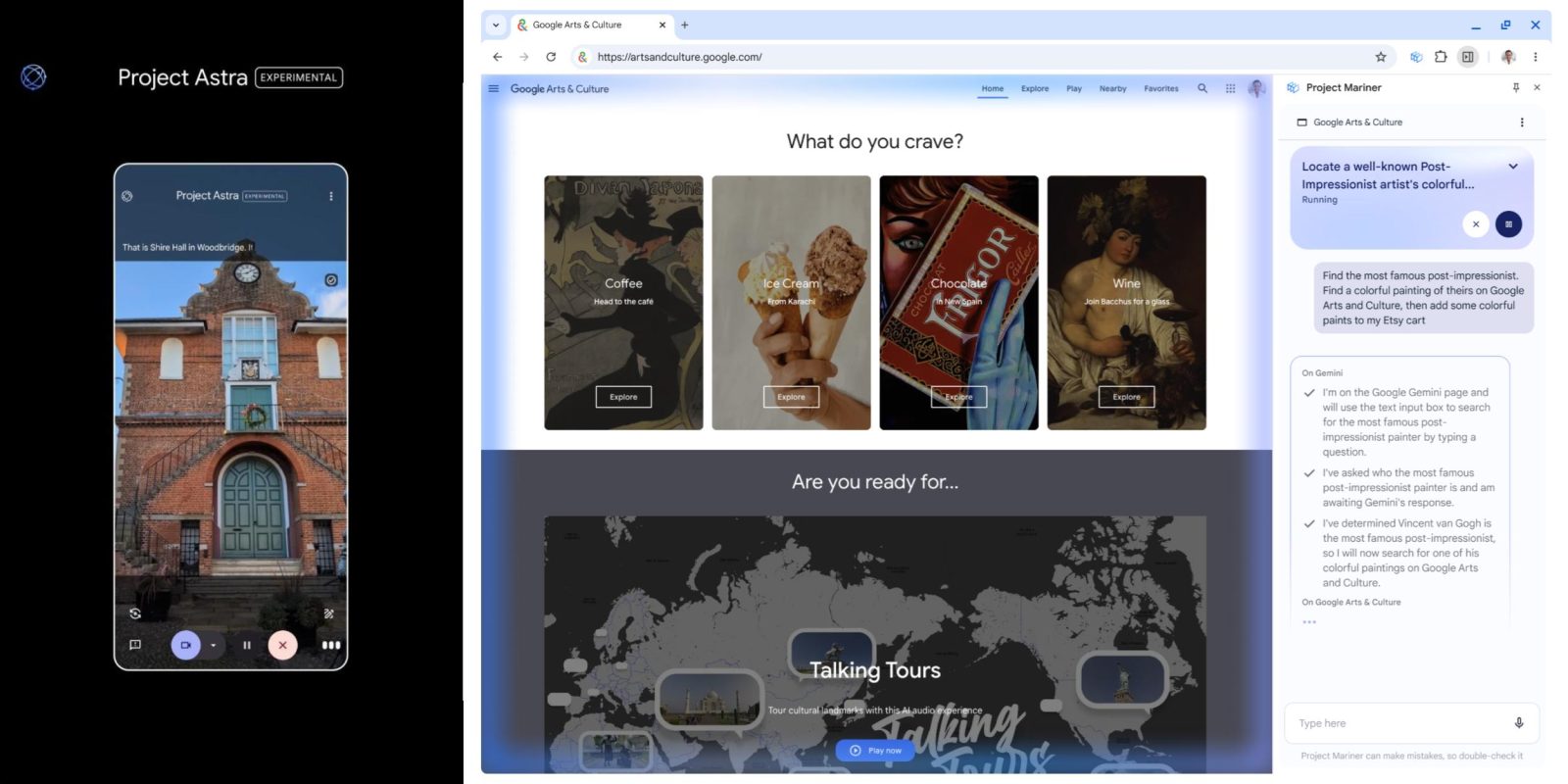

Meanwhile, Project Mariner is an agent that can browse the web and navigate it (typing, scrolling, or clicking) to complete a broader task specified by the user. Specifically, it can “understand and reason across information in your browser screen, including pixels and web elements like text, code, images and forms.”

At the moment, it exists as a Chrome extension that makes use of the existing side panel UI. Google demoed it with a small business use case and a shopping use case.

When evaluated against the WebVoyager benchmark, which tests agent performance on end-to-end real-world web tasks, Project Mariner achieved a state-of-the-art result of 83.5% working as a single-agent setup.

On the safety front, Project Mariner can only perform actions in the active browser tab. It will ask users to confirm “certain sensitive actions, like purchasing something.” It is also being designed to “identify potentially malicious instructions from external sources and prevent misuse,” such as fraud and phishing attempts.

Trusted testers are starting to test Project Mariner using an experimental Chrome extension now, and Google says it is “beginning conversations with the web ecosystem in parallel.”

Google also discussed an “experimental AI-powered code agent that integrates directly into a GitHub workflow” called Jules.

It can tackle an issue, develop a plan and execute it, all under a developer’s direction and supervision. Google says this effort is part of its “long-term goal of building AI agents that are helpful in all domains, including coding.”

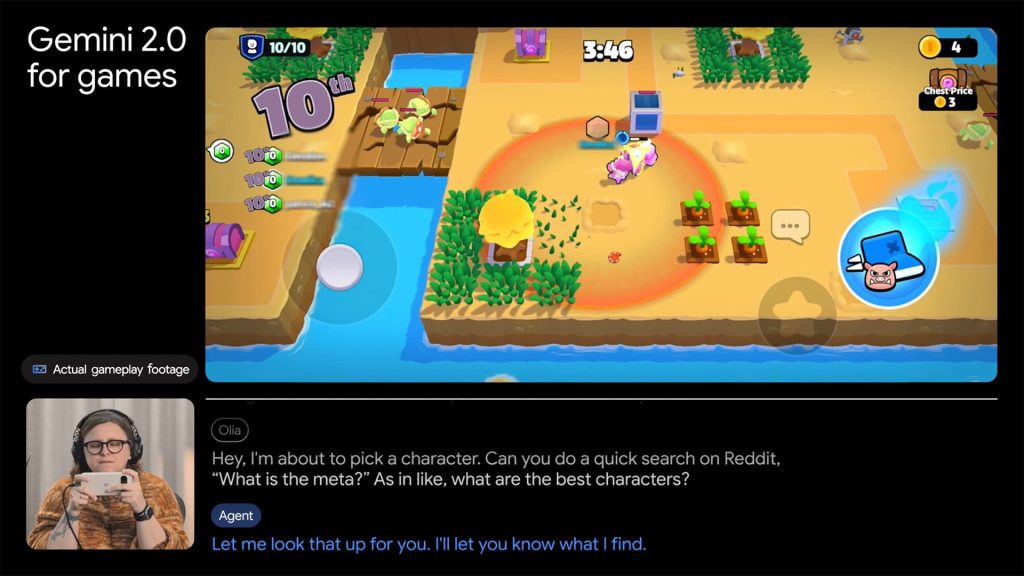

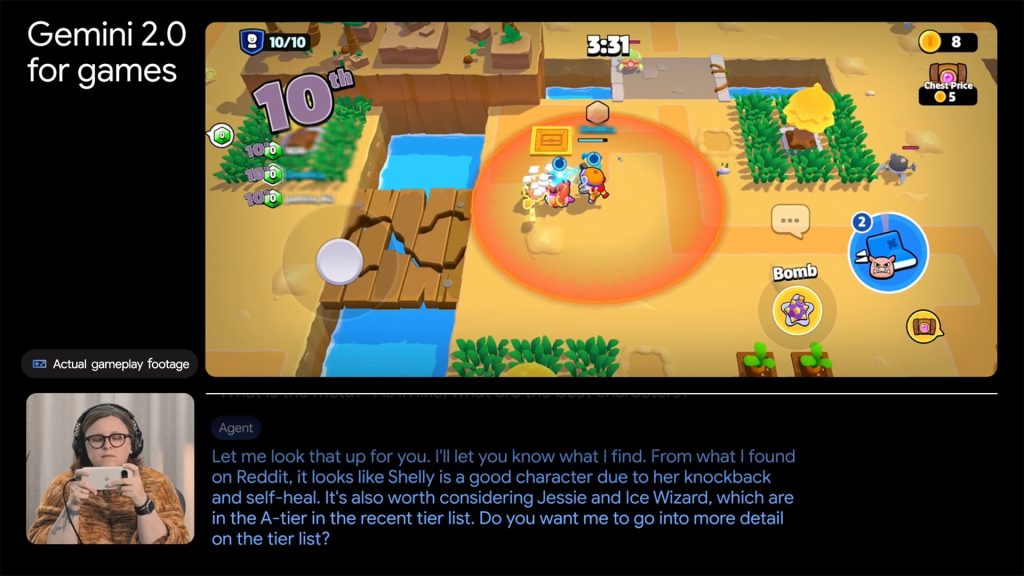

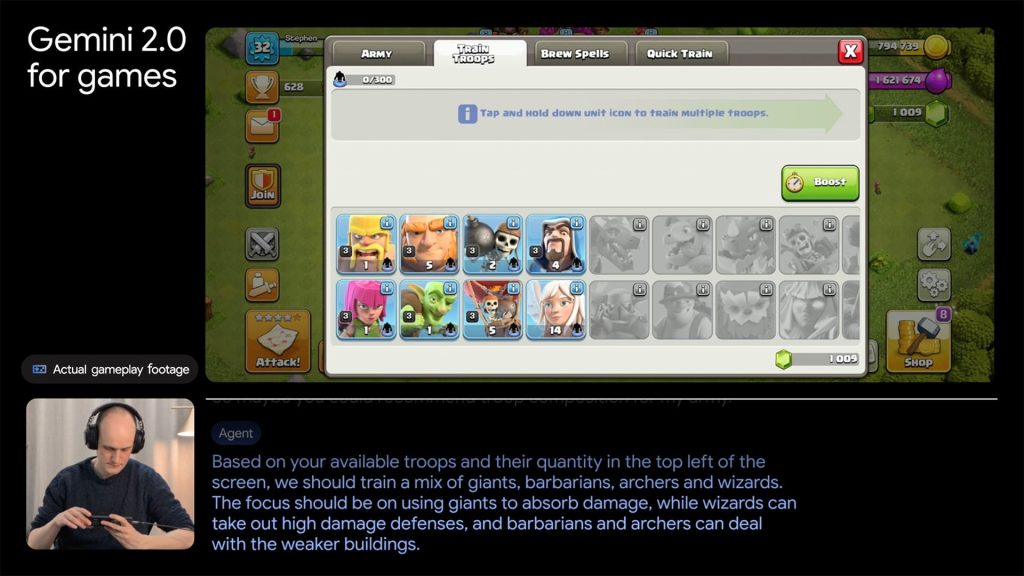

The last prototype is Gemini 2.0 for Games, which can serve as a “virtual gaming companion” that sees your mobile phone screen and answers your questions. It’s being tested with games like Clash of Clans.

It can reason about the game based solely on the action on the screen and offer suggestions for what to do next in real-time conversation.
