ChatGPT took the world by storm last year with its impressive generative AI, but it was often fraught with factual errors. Today, OpenAI is launching the next generation of its model: GPT-4.

The ChatGPT we’ve come to know over the past few months is powered by GPT-3.5, a refinement of the GPT-3 model that OpenAI first launched in 2020. OpenAI is now updating that foundation with GPT-4, a much more capable model.

The main pitch for GPT-4 is that its responses are “safer and more useful,” but it also brings new capabilities.

Like ChatGPT, GPT-4 generates text based on a prompt and the knowledge it picked up during training. OpenAI says the new model is more creative and can do everything from composing songs to learning a user’s writing style. GPT-4 can also take in far more context, accepting up to 25,000 words of input in a prompt.

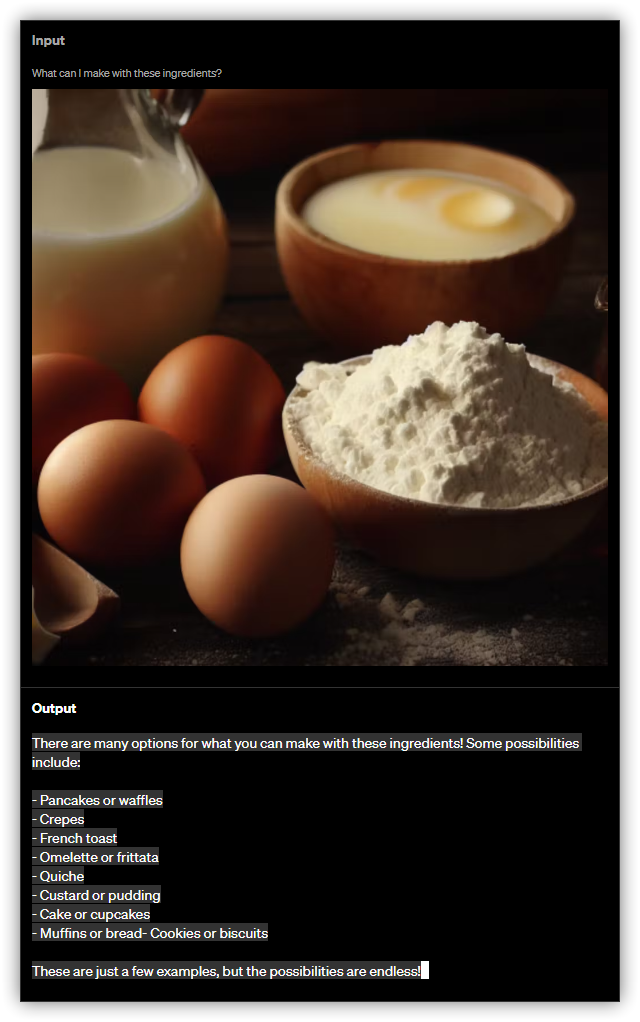

Another notable addition is GPT-4’s ability to accept images as input alongside text prompts. On its website, OpenAI shows an example in which the AI is given an image of a few raw ingredients and spits out a list of possible recipes using them.

GPT-4 also reasons better, handling complex questions that involve multiple data points. In one example involving three employees and their availability schedules, ChatGPT found the wrong free times, while GPT-4 answered correctly. On the Uniform Bar Exam, GPT-4 scored in the 90th percentile while ChatGPT landed in the 10th, and GPT-4 reached the 99th percentile on the Biology Olympiad, thanks to image input.

To make GPT-4 more accurate and trustworthy, OpenAI incorporated more human feedback, including feedback submitted by ChatGPT users. The company also applied “lessons from real-world use of our previous models” to improve the model.

Developers can join a waitlist for the GPT-4 API, but the model itself is available today to anyone with a $20/month “ChatGPT Plus” subscription, which brings GPT-4 to the popular chatbot.

According to OpenAI:

> ChatGPT Plus subscribers will get GPT-4 access on chat.openai.com with a usage cap. We will adjust the exact usage cap depending on demand and system performance in practice, but we expect to be severely capacity constrained (though we will scale up and optimize over upcoming months).

Duolingo, Be My Eyes, Stripe, Khan Academy, and others are also integrating the model.

Microsoft also confirmed that the “new Bing” chat experience is built on GPT-4:

> We are happy to confirm that the new Bing is running on GPT-4, customized for search. If you’ve used the new Bing in preview at any time in the last six weeks, you’ve already had an early look at the power of OpenAI’s latest model. As OpenAI makes updates to GPT-4 and beyond, Bing benefits from those improvements to ensure our users have the most comprehensive copilot features available.

