Unlike the Pixel 4 series, the Pixel 6 Pro has no traditional, dedicated hardware to power face unlock, a feature we reported last week was originally planned for launch. Here’s how it might work instead.

On the Pixel 4 (and the iPhone’s Face ID system), face unlock starts with a flood illuminator shining infrared light at you. A dot projector then projects thousands of small points onto your face. IR camera(s) capture that image and compare it to the face unlock model that was saved during setup.
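The final comparison step can be illustrated with a minimal Python sketch. It assumes, hypothetically, that both the new capture and the model saved at setup have already been reduced to numeric feature vectors, and it accepts a match when their cosine similarity clears a made-up threshold. This is an illustration of the general idea only, not Google’s or Apple’s actual matching code.

```python
import math

# Hypothetical threshold: a real system would tune this to balance
# false accepts (strangers unlocking) against false rejects (owner locked out).
MATCH_THRESHOLD = 0.9


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_match(enrolled: list[float], captured: list[float],
             threshold: float = MATCH_THRESHOLD) -> bool:
    """Compare a new capture against the face model saved at setup (both hypothetical vectors)."""
    return cosine_similarity(enrolled, captured) >= threshold


# Toy vectors standing in for the output of some (hypothetical) face-embedding model.
enrolled = [0.2, 0.9, 0.4]
same_person = [0.21, 0.88, 0.41]
someone_else = [0.9, 0.1, 0.2]
print(is_match(enrolled, same_person), is_match(enrolled, someone_else))
```

A similarity score plus a threshold keeps the decision simple; the hard part in practice is producing vectors that stay close for the same face and far apart for different ones.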

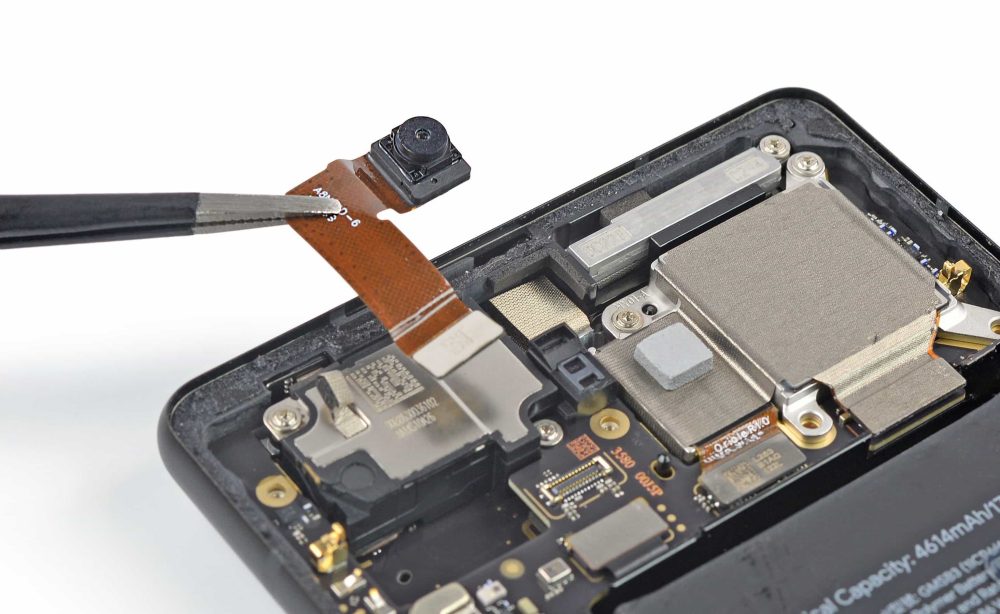

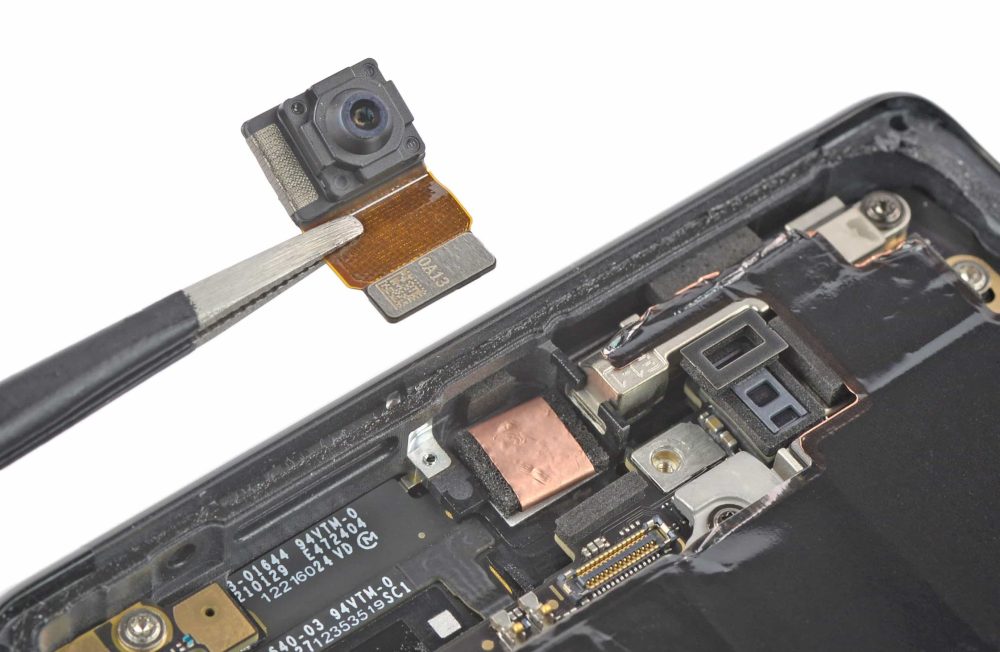

Looking at Google’s current generation of Pixel phones, the front-facing camera on the 6 Pro has a higher megapixel count (11.1 vs. 8MP), a wider field of view (94 vs. 84 degrees), and larger pixels (1.22 vs. 1.12μm) than the regular Pixel 6’s, though with a slightly narrower aperture (ƒ/2.2 vs. ƒ/2.0). However, the most important difference is that the Sony IMX663 in Google’s larger phone supports dual-pixel autofocus (DPAF), while the IMX355 in the smaller model does not.

Interestingly, the Pixel 6 Pro specs page does not mention dual pixels for the front-facing camera, but we know the sensor has them from Asus’s use of the IMX663 on the Zenfone 8.

Google has been using DPAF since the Pixel 2 to generate depth maps for Portrait Mode with just a single lens, including the front-facing camera. Google explained in a blog post from 2017:

> If one imagines splitting the (tiny) lens of the phone’s rear-facing camera into two halves, the view of the world as seen through the left side of the lens and the view through the right side are slightly different. These two viewpoints are less than 1mm apart (roughly the diameter of the lens), but they’re different enough to compute stereo and produce a depth map.
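The stereo idea in that quote can be sketched in a few lines of Python. This is a simplification: it treats the left and right half-lens views as an ordinary stereo pair, finds the pixel shift (disparity) between them with textbook sum-of-absolute-differences block matching, and converts that shift to distance with the pinhole relation Z = f·B/d. The function names, the 1,000-pixel focal length, and the 1mm baseline are illustrative assumptions, not values from Google’s pipeline.

```python
def best_disparity(left: list[float], right: list[float],
                   x: int, window: int, max_disp: int) -> int:
    """Return the shift d (pixels) that best aligns the patch of `left` centered
    at x with the patch of `right` centered at x - d, using the sum of absolute
    differences (SAD) as the matching cost."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        cost = 0.0
        for k in range(-window, window + 1):
            xl, xr = x + k, x + k - d
            if not (0 <= xl < len(left) and 0 <= xr < len(right)):
                cost = float("inf")  # patch falls off the row: reject this shift
                break
            cost += abs(left[xl] - right[xr])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo triangulation: depth Z = f * B / d (infinite if no shift)."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


# One row of pixels as seen through each half of the lens (toy data).
left = [0, 0, 0, 5, 9, 5, 0, 0, 0, 0]
right = left[2:] + [0, 0]  # same feature, shifted 2 px
d = best_disparity(left, right, x=4, window=1, max_disp=3)
print(d, depth_from_disparity(d, focal_px=1000.0, baseline_m=0.001))
```

With these toy numbers, a 2-pixel shift corresponds to 0.5m. Because the real baseline is under 1mm, actual dual-pixel shifts tend to be a small fraction of a pixel, which makes the raw estimate noisy.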

A year later, on the Pixel 3, Google further improved DPAF-based depth estimation with machine learning. (In 2019, the Pixel 4 improved Portrait Mode again by combining dual pixels with its dual rear cameras.)

A depth map generated by DPAF would be useful for capturing the contours of your face. Google also has depth-from-motion algorithms, developed through its work on ARCore, that require just a single RGB camera:

> The depth map is created by taking multiple images from different angles and comparing them as you move your phone to estimate the distance to every pixel.
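Depth from motion rests on the same triangulation idea, except the baseline comes from moving the phone between frames. The Python sketch below is a toy version under stated assumptions: a single static point, purely sideways camera motion with no rotation, and camera translations that are already known (for example from motion tracking). Each frame i then shifts the point by roughly d_i = f·t_i/Z pixels, and a least-squares fit of the inverse depth 1/Z pools all frames. `depth_from_motion` is a hypothetical helper, not part of ARCore’s API.

```python
def depth_from_motion(shifts_px: list[float], translations_m: list[float],
                      focal_px: float) -> float:
    """Estimate the depth Z (meters) of one static point seen in several frames.

    Assumed model: relative to a reference frame, the camera moves sideways by
    t_i meters (no rotation), so the point's image shifts by d_i = f * t_i / Z.
    Minimizing sum((d_i - f * t_i * w) ** 2) over the inverse depth w = 1/Z gives
        w = sum(d_i * t_i) / (f * sum(t_i ** 2)).
    """
    if not shifts_px or len(shifts_px) != len(translations_m):
        raise ValueError("need one pixel shift per camera translation")
    num = sum(d * t for d, t in zip(shifts_px, translations_m))
    den = focal_px * sum(t * t for t in translations_m)
    if den == 0 or num <= 0:
        raise ValueError("camera barely moved or shifts are inconsistent")
    return den / num  # Z = 1 / w


# Synthetic example: f = 1000 px, point about 0.4 m away, slightly noisy shifts.
shifts = [24.0, 51.0, 75.0]
moves = [0.01, 0.02, 0.03]
print(round(depth_from_motion(shifts, moves, focal_px=1000.0), 3))
```

Using several frames instead of two lets small per-frame measurement errors average out, which is the benefit of comparing multiple images as the phone moves.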

Meanwhile, Google’s Tensor chip speeds up ML processing, and the company has previously touted how, in photography, the chip enables faster, more accurate face detection while using less power.

Together, these building blocks could let Google bring face unlock to the Pixel 6 Pro by drawing on skills it has spent years developing in computational photography and machine learning.

Thanks tipster
