Back in September, Google previewed the ability to search for nearby places using augmented reality. AR search in Google Maps Live View will start rolling out next week, while Google Lens multisearch will let you take a food picture and see where the dish is available nearby.

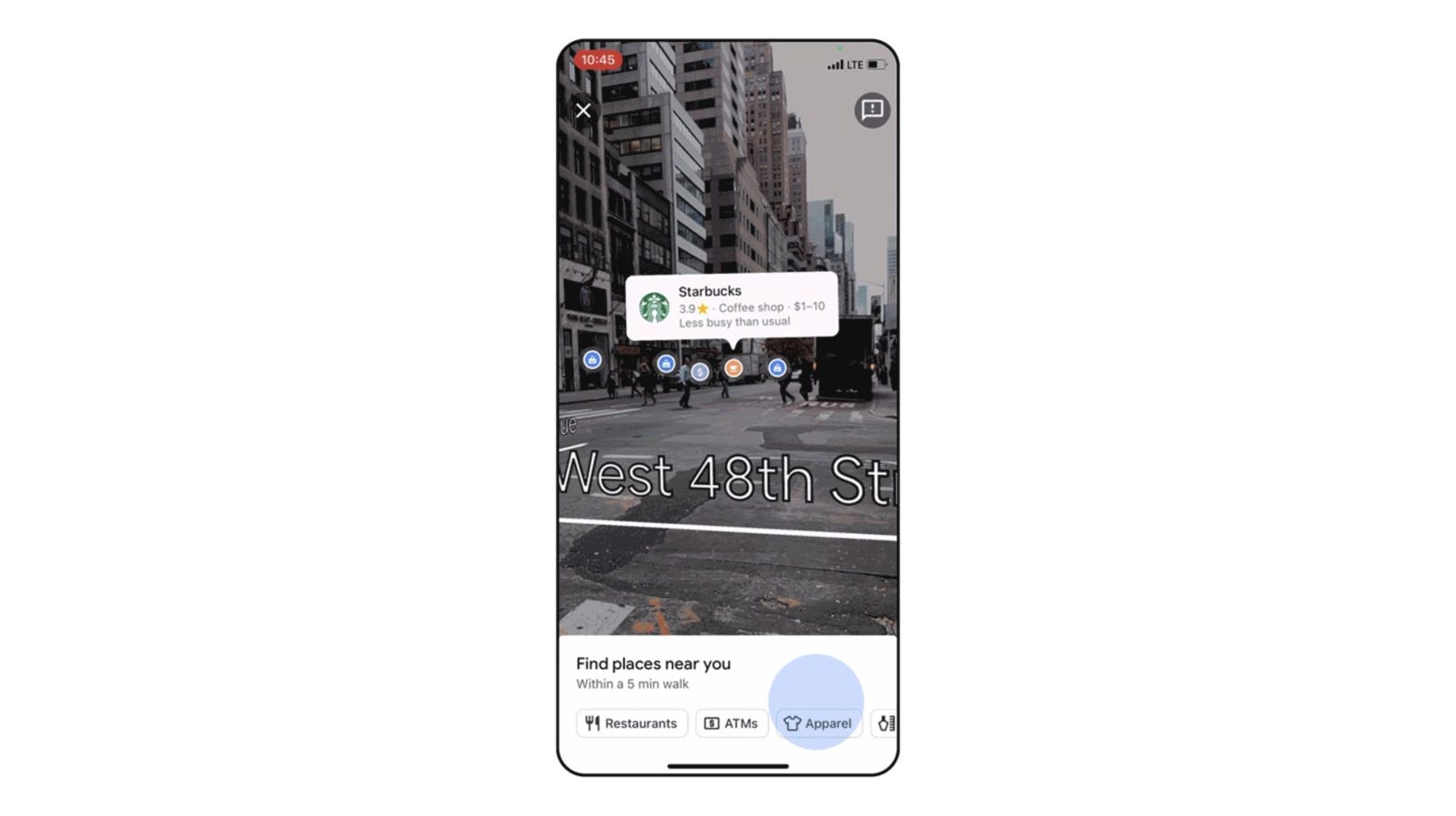

When you launch Live View, which is now possible through the camera icon in the Google Maps search bar, a bottom sheet will let you “Find places near you” that are “within a 5 min walk.” Colorful pins from the flat map view highlight points of interest, and the card for whichever one is currently centered appears automatically.

You get the name, icon, rating, category/description, price range, hours, and whether it’s busy. Google will also highlight “Out of view” spots that “aren’t in your immediate view (like a clothing store around the block) to get a true sense of the neighborhood at a glance.”

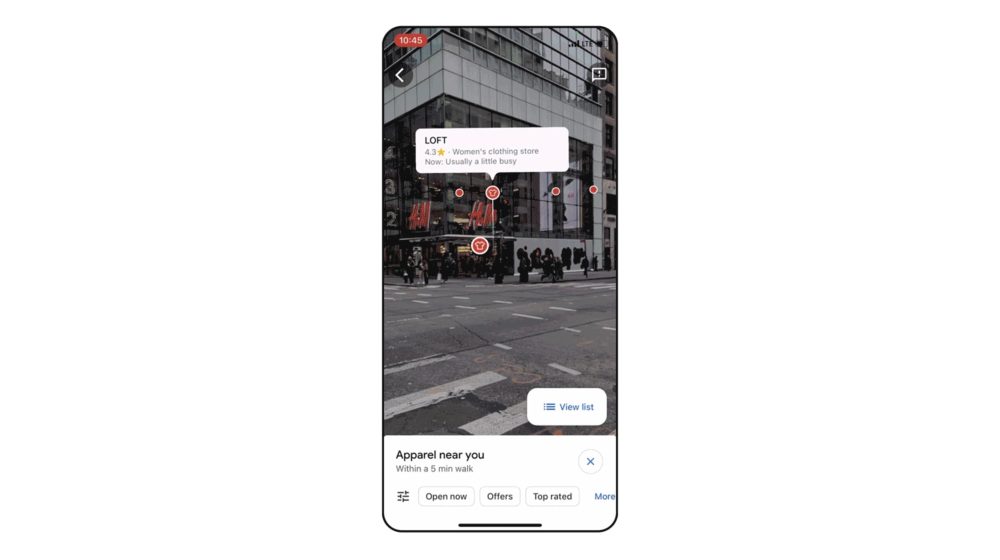

To search, a bottom sheet carousel includes common categories like Restaurants, ATMs, Apparel, and Shopping. You also get filters for Open now, Offers, and Top rated, as well as the ability to get a list view.

After making a selection, matches will be overlaid with arrows and the distance noted on the card. Tapping a match will start directions. Live View search in Google Maps is rolling out starting next week on Android and iOS in Los Angeles, San Francisco, New York, London, and Paris.

Meanwhile, Google Lens multisearch, which lets you append text queries to analyzed pictures, will support taking a photo or screenshot of food and then adding “near me” to “quickly find a place that sells it nearby.” This is launching today in US English.

Google also reiterated that the Lens AR Translate upgrade is rolling out later this year. This method uses the same AI tech behind Pixel Magic Eraser to delete the original text and then recreate it, matching the style, to better preserve context than the current color-block approach, which covers the original text and then overlays the translated text.

On the shopping front, a new AR feature will make it easier to find the right foundation, which is “among the most-searched makeup categories.” To improve accuracy, Google has a new photo library of “148 models representing a diverse spectrum of skin tones, ages, genders, face shapes, ethnicities and skin types.”

Here’s how it works: Search for a foundation shade on Google across a range of prices and brands, like “Armani Luminous Silk Foundation.” You’ll see what that foundation looks like on models with a similar skin tone, including before and after shots, to help you decide which one works best for you. Once you’ve found one you like, just select a retailer to buy.

You’ll also be able to “spin, zoom and see shoes in your space” from brands like Saucony, Vans, and Merrell by searching for “Shop blue VANS sneakers” and using “View in my space.” These two features start rolling out today.
