Over the past few months, ChatGPT has changed the game in many ways, and the next step is AI-powered chatbot experiences for search. However, those experiences are only as good as the information feeding them, so it’s time for Google to really bring the hammer down on “SEO spam.”

Finding information through Google Search, or really any search engine, has become a more difficult task in recent years.

SEO spam has taken over, as many have spun up or repurposed websites dedicated to putting out content that ranks in search results but falls short on quality. When searching for information on popular topics, and even niche ones, it’s common to find a result that seems like it may answer your question but in reality spews out hundreds of words on the topic without ever providing an answer or, even worse, provides a false one.

The rise of websites that pump out post after post of SEO spam has led to dilution in search. It makes finding quality content, or the original source, a difficult task for someone who just wants a factual answer from a trustworthy voice.

Google, to its credit, at least says it has made a significant move to bring the hammer down on SEO spam. Last year, the company revealed its “helpful content” update, which told the world that the content that ranks well in Search would be content written from a place of expertise and aimed at answering a question correctly, not content written with the sole goal of getting the top slot in Search for the sake of ad dollars.

But in the months since, it hasn’t felt like that update has had a major impact, and it’s still difficult to find answers through Search without spending a ton of time digging.

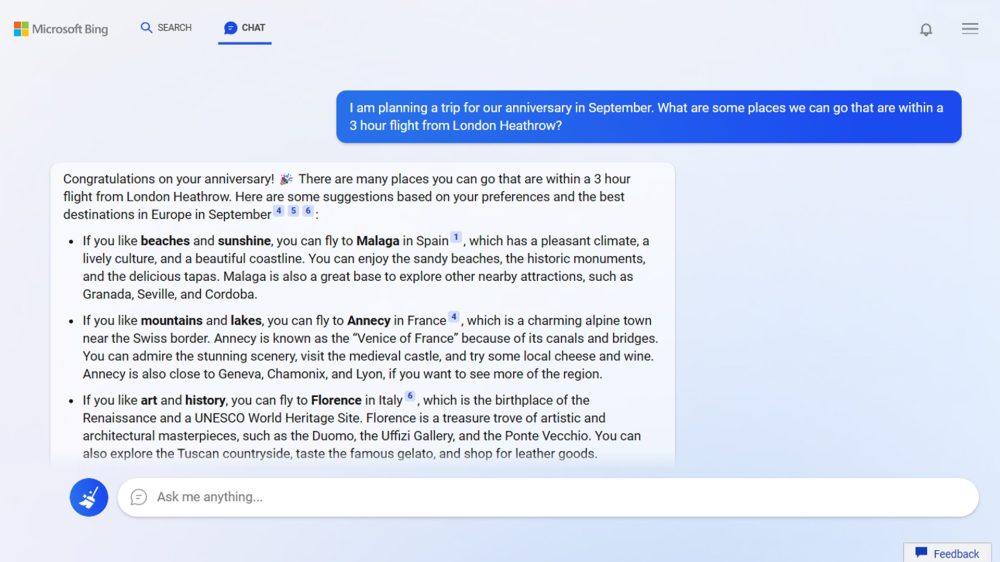

That difficulty is a big reason that “chatbot” search experiences are so appealing. As Microsoft showed off with the “new Bing,” the idea of being able to ask a question and get a straightforward answer with sources cited is genuinely exciting in many ways. And it seems that’s the same goal Google has with “Bard,” which it first revealed recently but has yet to go into great detail on.

But in either case, the information these chatbots can provide is only as good as the information they’re being fed.

With so much dilution on the web created by “SEO spam,” it really wouldn’t be out of the question for an AI to end up pulling information, especially on a more niche subject, that’s just flat-out wrong or outdated. Occasionally that might stem from a misunderstanding among trusted sources – for instance, for months everyone was convinced Fitbit had confirmed it was working on a Wear OS-powered smartwatch, but that was later revealed to just be the company talking about its work with the Pixel Watch. Mistakes like that will always happen, and they’ll surely make their way into these chatbots, but the bigger problem is the errors and misinformation that stem from content on the web that comes from sources that are less concerned with providing accurate information.

And really, there are countless examples to pull from here, but one prominent and recent example that comes to mind is that of CNET.

The long-respected publication was recently revealed to be publishing AI-generated content, which its parent company Red Ventures pushed for to fill out articles about finances and credit cards. The goal was clear: flood Google Search with as much content about the subject as possible for as little cost as possible, as an exposé by The Verge points out. But once these AI articles were discovered, factual errors in those posts were discovered too. CNET itself confirmed finding errors in 41 out of the 77 AI-generated posts, some of which were phrased in strong language that a reader wouldn’t really give a second thought unless they knew the answer was incorrect.

Responding to questions about CNET’s practice, Google’s Public Liaison for Search said that AI content like that wouldn’t necessarily be penalized in Search, as long as it was still “helpful.” As Futurism pointed out, this only fueled the fire for “SEO spam” to come from AI-generated content from less-than-reputable actors.

Let’s imagine these SEO spam posts make their way to the web and bring incorrect details, and then the AI swoops them up and spits them back out as an answer to a user’s query.

In today’s search, a page of results can show a long list of links, which encourages people to dig through and find the answer to their question from multiple sources and come to a consensus. It’s not always convenient, but with so much misinformation out there, it is important. And with that system, the odds are fairly strong that the truth can win out.

But with a chatbot interface that spits out an answer, much of that context could be lost. It’s hard enough to find an accurate answer when you’re looking at a list of links. Now imagine there’s a wall of questions and responses from the AI chatbot in between you and the source information, with nothing but a tiny list of links at the bottom to show you where the AI is getting its information. At its best, that encourages laziness, and at worst it could spread misinformation like wildfire.

All of this presents a potential “perfect storm” in which misinformation is given an even bigger spotlight, with even less chance of the average Joe being able to know something is incorrect. If we can’t tell the difference now, when we’re seeing the sources fairly straightforwardly, how will anyone be able to tell when the sources are hidden under an interface that focuses on showing mainly your queries and the AI’s responses?

An argument against this might be that these chatbots are showing their sources, or even displaying everything side-by-side. But, let’s be honest here. In today’s society, the vast majority of folks are going to go for the easy answer over doing their own research, even if Microsoft directly tells them they should still check sources.

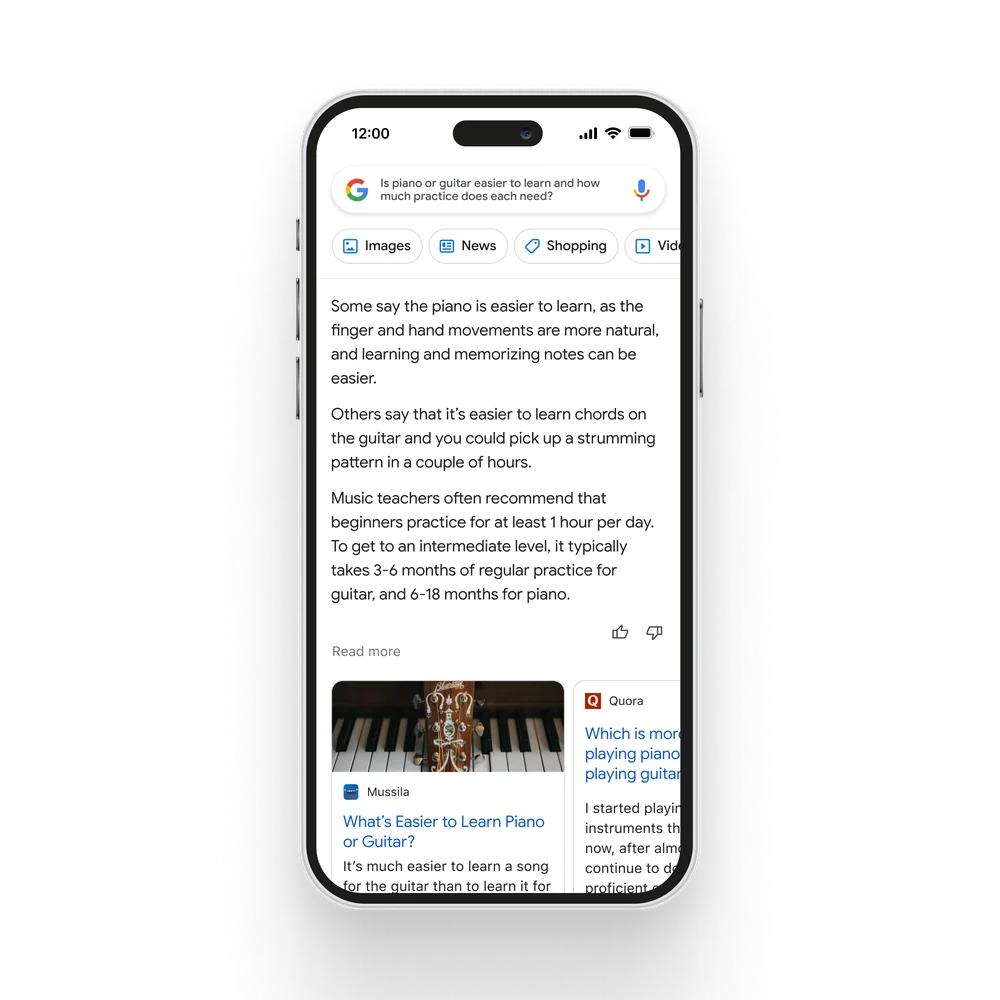

Looking at Google’s Bard, we still don’t know much about how the “final product” will look, whereas Microsoft has a product that’s already shipping, so it’s unclear how Google intends to cite its sources. With “AI Insights” in Search, as seen below, Google shows a “read more” section with prominent links, but those aren’t necessarily cited as sources. With plenty of time before this experience reaches the public, hopefully Google will figure out how to get this right.

If Google doesn’t do something to punish the bad actors focused solely on getting a buck from a high-ranking article over factual reporting, it’s just going to be a loss for everyone.

And the company needs to do something soon. While this new AI interface has the potential to starve out SEO spam websites by lessening the traffic they receive, projects like “Bard” can only survive if they’re trustworthy, and that only works if Google takes a harder line against these sorts of plays sooner rather than later.

