Google Arts & Culture has a new “Calling in Our Corals” project bubbling up to the surface. Using AI models trained on audio labeled by users, teams will be able to monitor and better understand ocean ecosystems, with the main goal of repairing those fragile habitats.

Trained AI can do a lot more than stand in for an intelligent human in conversation. In some cases, it can be put to rather rewarding work. One such project is the new initiative from Google Arts & Culture to use AI to protect and maintain coral reefs and other marine life. Most people are aware that coral reefs around the world are suffering, as rather depressing before-and-after images are easy to find online.

Setting out to change that, Google is now using AI models to sift through hours of audio recordings of coral reefs taken in 10 countries. Before letting that AI model take the reins, Google is turning some of the heavy lifting over to users in a new experiment named “Calling in Our Corals.”

Through the Google Arts & Culture website, users will be able to listen to high-quality audio recordings of coral reef environments, which will eventually be used to train AI. These clips are incredibly detailed, capturing noises from different species of fish and shrimp that normally go unheard.

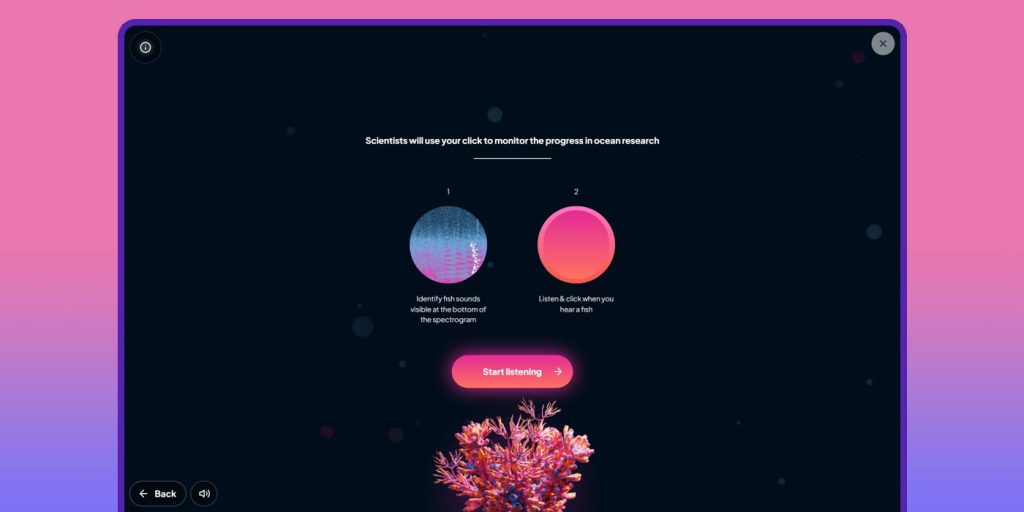

Paired with the audio is a spectrogram that visually represents the audio signature of that coral reef. Whenever sea life makes noise, there’s a good chance the sound will be visible in the spectrogram. Each signature is unique, with whales represented by thick lines and shrimp sounds looking thin and fast. As the listener, your job on the Arts & Culture site is to pinpoint the moments in the recording where those noises occur.
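For the curious, here is a rough idea of how a spectrogram is built: the recording is cut into short overlapping frames, and each frame is broken down into the frequencies it contains. The sketch below is only an illustration in plain Python, not Google's code; the function name `spectrogram`, the frame sizes, and the 1 kHz test tone are all assumptions made for the example.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of frames; each frame holds one magnitude per frequency bin."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # A Hann window softens the frame edges to reduce spectral leakage.
        windowed = [
            s * 0.5 * (1 - math.cos(2 * math.pi * n / (frame_size - 1)))
            for n, s in enumerate(frame)
        ]
        # Naive discrete Fourier transform, keeping the non-negative frequencies.
        mags = []
        for k in range(frame_size // 2 + 1):
            total = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(windowed)
            )
            mags.append(abs(total))
        frames.append(mags)
    return frames


# Hypothetical test signal: a 1 kHz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(256)]

spec = spectrogram(tone)
# The loudest bin in the first frame, converted back to a frequency in Hz.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / 64
```

Plotting each frame as a column, with brighter pixels for larger magnitudes, gives the kind of picture shown in the experiment. A steady tone shows up as one bright horizontal band, while short clicks appear as thin vertical streaks.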

The idea behind the project is to effectively crowdsource audio breakdowns from humans. Over time, human input paired with visual readings from the Arts & Culture coral reef audio clips will be used to train an AI model that will do the work automatically. Google notes that there are hundreds of hours of recordings. Turning them over to the public will allow the team to break down this data faster. In the future, an AI model sifting through the data should be even quicker.
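Google hasn't detailed how listener input gets turned into training data, but one common approach to crowdsourced labeling is to keep only the moments that several people flagged independently. The sketch below assumes that approach; the function `consensus_events`, the listener names, the half-second tolerance, and the two-vote threshold are all hypothetical.

```python
def consensus_events(clicks_by_listener, tolerance=0.5, min_votes=2):
    """Group click timestamps (in seconds) from several listeners into agreed events."""
    # Flatten to (time, listener) pairs and sort them by time.
    tagged = sorted(
        (t, listener)
        for listener, times in clicks_by_listener.items()
        for t in times
    )
    clusters = []
    cluster = []
    for t, listener in tagged:
        # Start a new cluster when the gap from the previous click is too large.
        if cluster and t - cluster[-1][0] > tolerance:
            clusters.append(cluster)
            cluster = []
        cluster.append((t, listener))
    if cluster:
        clusters.append(cluster)
    # Keep clusters backed by enough distinct listeners; report the mean click time.
    return [
        round(sum(t for t, _ in c) / len(c), 2)
        for c in clusters
        if len({name for _, name in c}) >= min_votes
    ]


# Hypothetical clicks from three listeners on the same clip.
clicks = {
    "listener_a": [1.0, 5.2, 9.0],
    "listener_b": [1.2, 5.0],
    "listener_c": [1.1, 12.4],
}
agreed = consensus_events(clicks)
```

Here the clicks near 1 second and 5 seconds survive because at least two listeners agree on them, while the lone clicks at 9.0 and 12.4 seconds are dropped as possible mistakes. Timestamps filtered this way could then serve as labels for training a model.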

The project itself can be found on the Google Arts & Culture website under the Experiments section. All you need to do is sit down, relax, and listen to the intricate sounds of coral reefs. During each audio clip, you simply click a button whenever you hear something. This surprisingly meditative project is a neat way to play a small part in repairing the world’s oceans.
