Following the tease at the Pixel Watch 2 launch in October, Google Health provided an update on Fitbit Labs at The Checkup 2024 event.

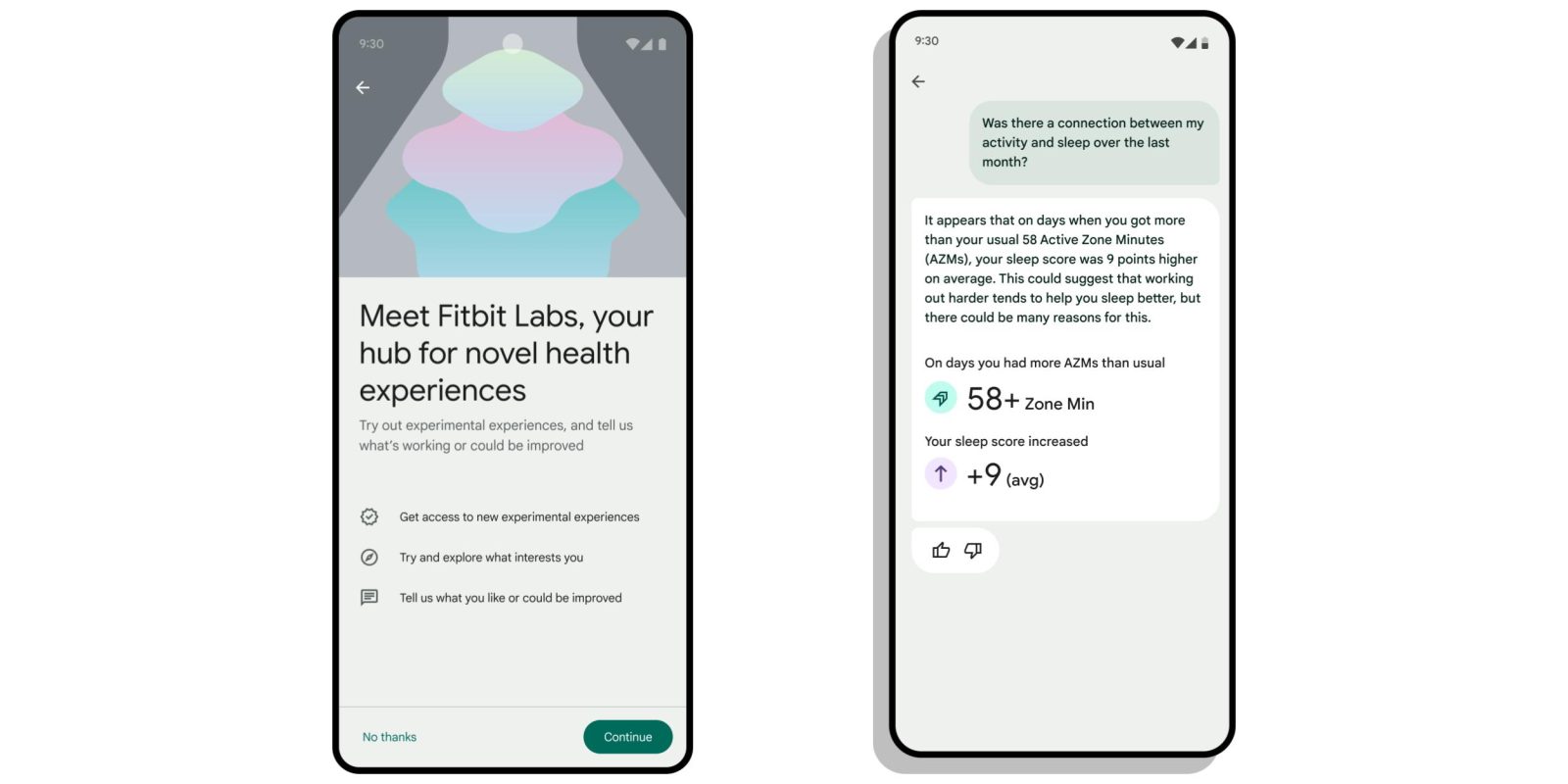

Fitbit Labs will let Fitbit Premium users test and give feedback on experimental AI features. One such capability is essentially a chatbot that lets you ask questions about your Fitbit data in a natural and conversational manner. The overall aim is to generate “actionable messages and guidance,” with responses including charts that “help you understand your own data better.”

> This model is being fine-tuned to deliver personalized coaching capabilities, like actionable messages and guidance, that can be individualized based on personal health and fitness goals.

- “For example, you could dig deeper into how many active zone minutes (AZMs) you get and the correlation with how restorative your sleep is.”

- “…this model may be able to analyze variations in your sleep patterns and sleep quality, and then suggest recommendations on how you might change the intensity of your workout based on those insights.”

This is powered by a new Personal Health Large Language Model from Fitbit and Google Research. It’s built on Gemini models and “fine-tuned on a de-identified, diverse set of health signals from high-quality research case studies.” This model will “power future AI features across [Google’s] portfolio.”

> The studies are being collected and validated in partnership with accredited coaches and wellness experts, enabling the model to exhibit profound reasoning capabilities on physiological and behavioral data. For example, we’re testing performance using sleep medicine certification exam-like practice tests, and are already seeing that our model currently performs well.

Fitbit Labs will be available “later this year” for a “limited number of Android users who are enrolled in the Fitbit Labs program in the Fitbit mobile app.”

Meanwhile, Google Search is adding more visual information, especially on mobile, for health conditions like migraines, kidney stones, and pneumonia. This includes images and diagrams from high-quality sources to help you better understand symptoms.

This joins the ability to use Google Lens to search for skin conditions by taking a photo. Launched last year, that feature is now available in over 150 countries. YouTube is also working to translate medical content with Aloud, Google’s AI-powered dubbing tool.

Elsewhere, Google is researching fine-tuning a version of Gemini for the medical domain.

> And because our Gemini models are multimodal, we were able to apply this fine-tuned model to other clinical benchmarks — including answering questions about chest X-ray images and genomics information. We’re also seeing promising results from our fine-tuned models on complex tasks such as report generation for 2D images like X-rays, as well as 3D images like brain CT scans – representing a step-change in our medical AI capabilities.
