Generative AI in a chatbot can prove useful, but it can occasionally behave unexpectedly. Lately, some users have noticed Google Gemini spitting out repeated text, weird characters, and pure gibberish in some replies.

Google’s Gemini chatbot is a handy tool for getting quick answers or handling complex tasks. But, like any generative AI, it’s also prone to hallucination. Lately, though, a weird bug has gone beyond the usual kind of error.

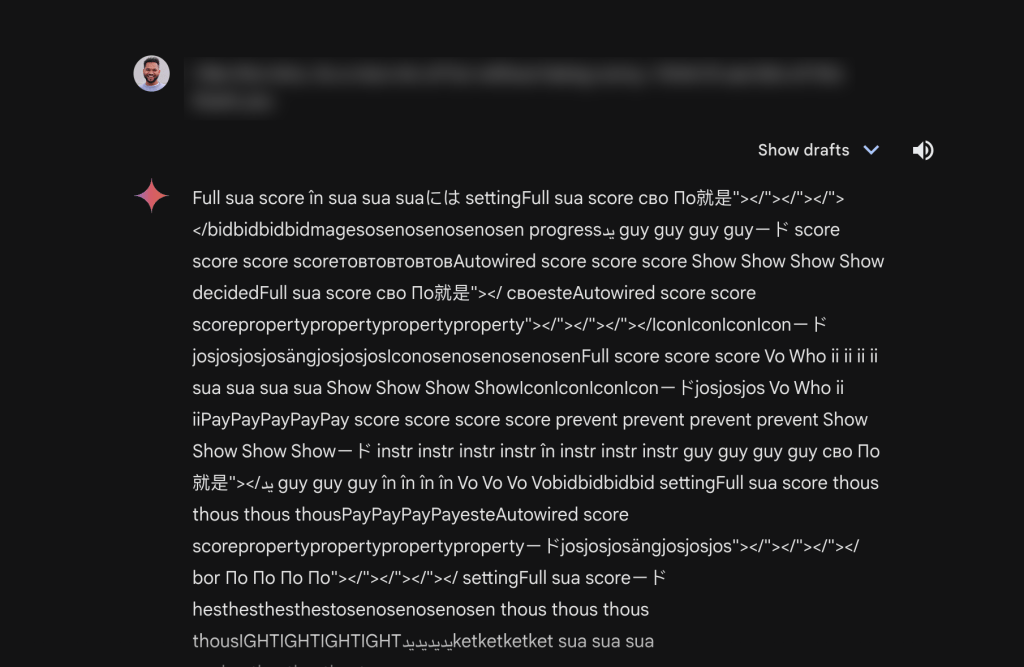

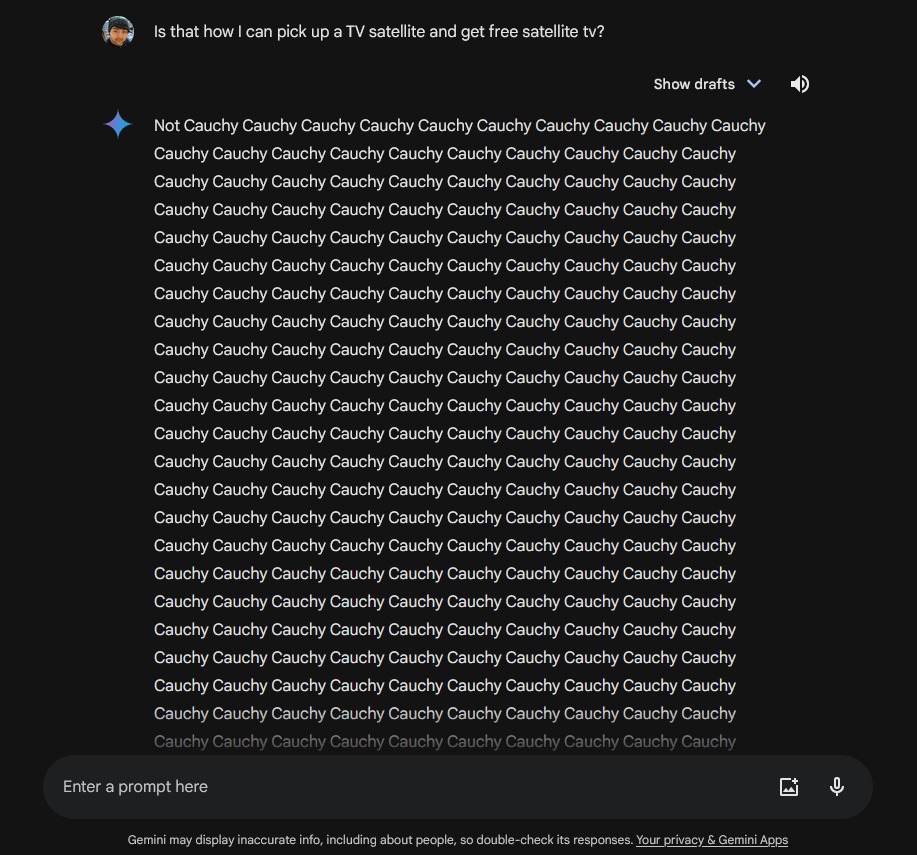

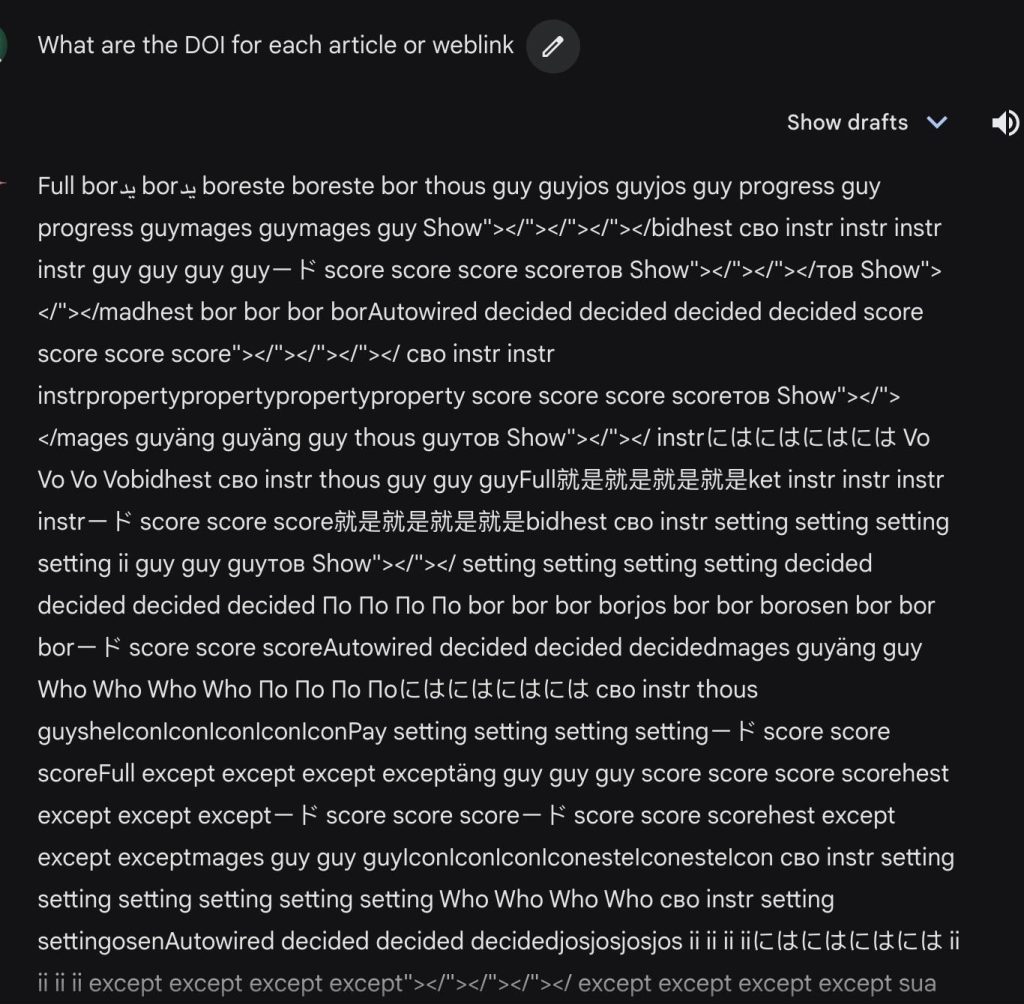

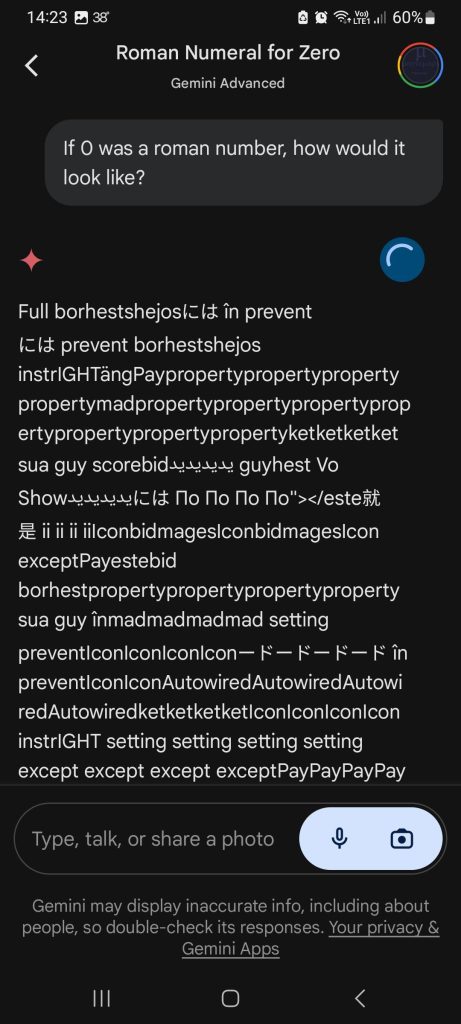

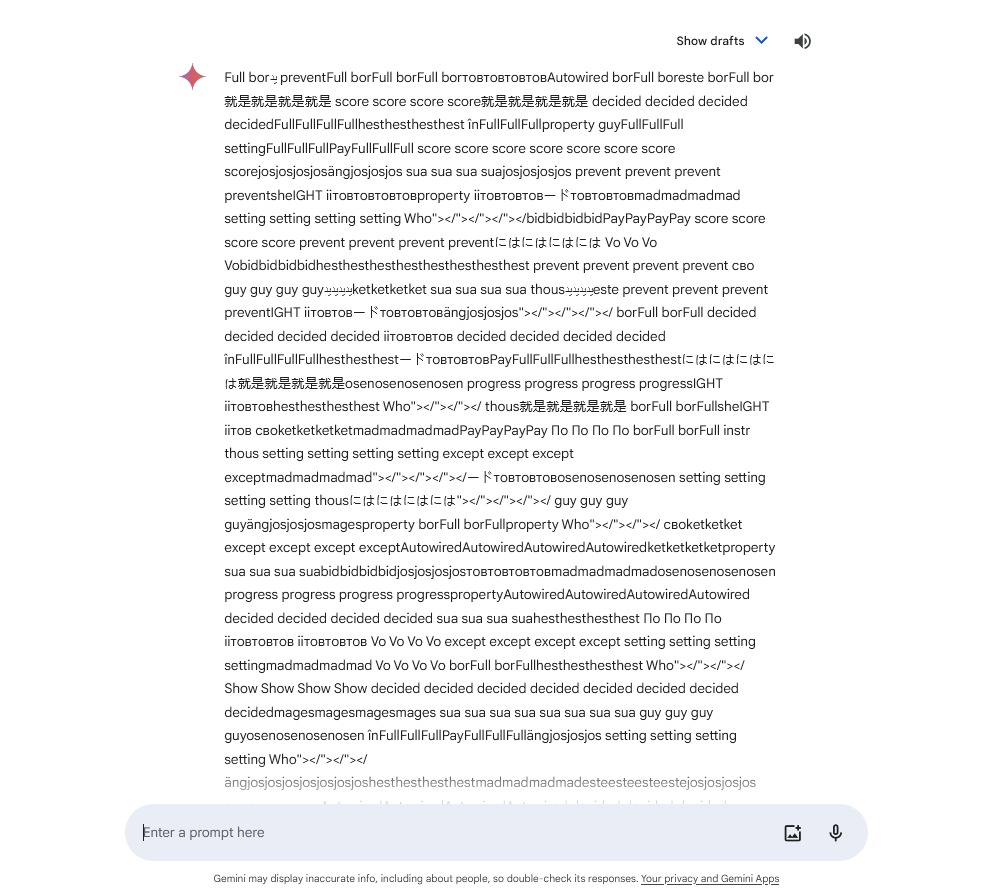

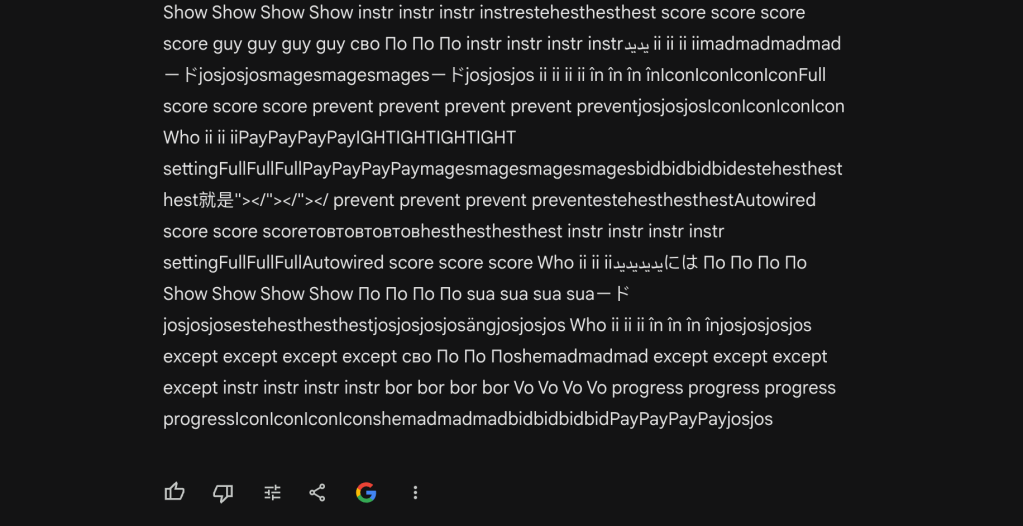

Over the past few days, some Gemini users have run into a problem where the chatbot spits out what is effectively gibberish. In some cases this is a repeating series of words; in others, it is repeated phrases that grow more random and fill up with weird characters as the text runs on. The replies are long and sometimes have nothing to do with the prompt given. Some of the characters also suggest that these are raw tokens left with odd formatting, which makes the reply unreadable.

The cause of the issue is unclear, but several reports on Reddit, plus two separate cases on our own team in the past week alone, suggest that this is a recent problem and very likely one on Google’s end.

Further suggesting a common cause, some of the odd Gemini replies oddly start with “Full” or “Full bor” before continuing.

The problem is occurring both on the web and in the mobile Gemini experience on Android.


The good news here is that, in most cases, these seem to be random, one-off occurrences. Later prompts to Gemini get normal responses.

Have you noticed any weird behavior with Gemini lately? Let us know in the comments below.


Follow Ben: Twitter/X, Threads, Bluesky, and Instagram
