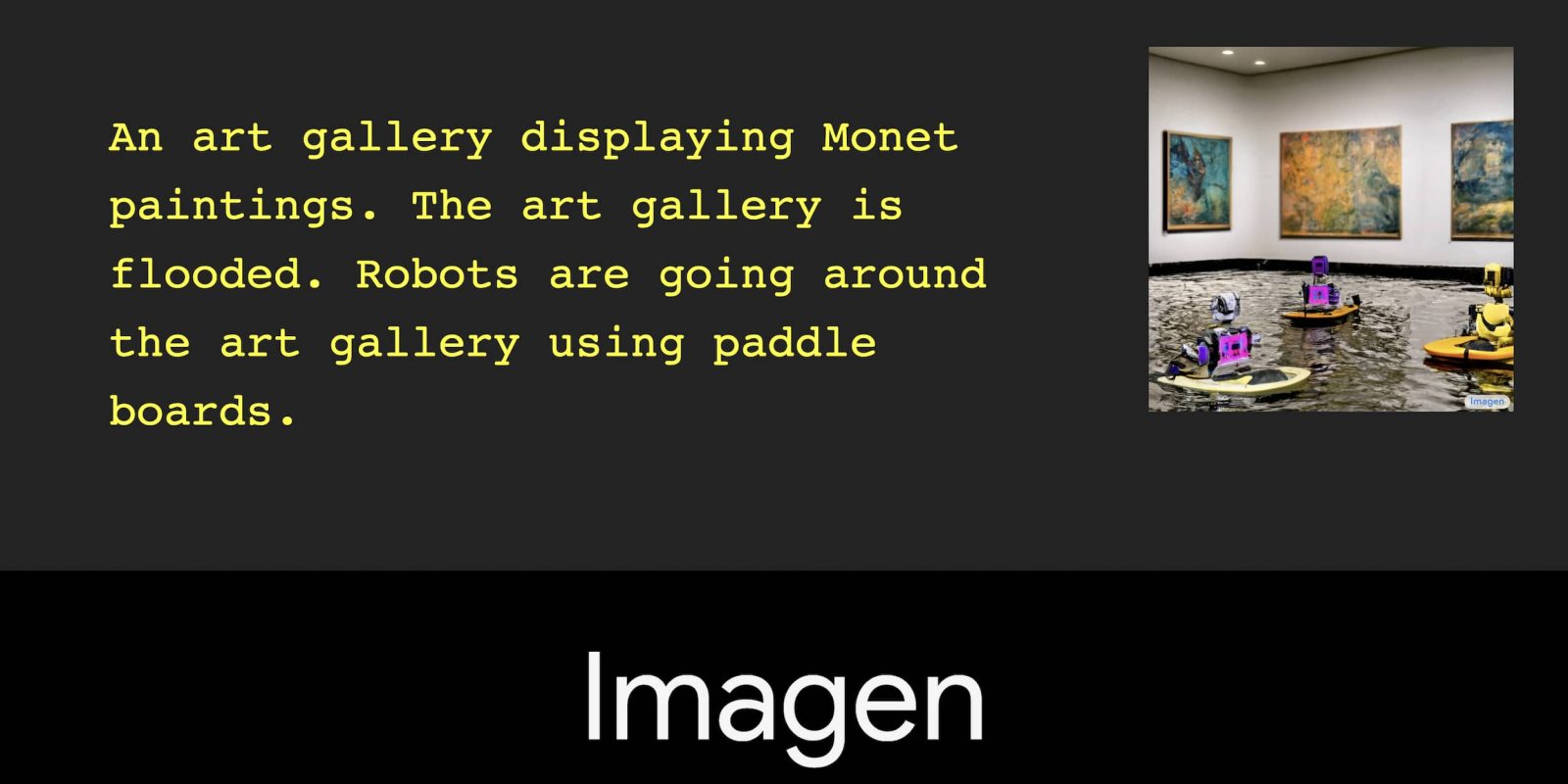

In recent weeks, the DALL-E 2 AI image generator has been making the waves on Twitter. Google this evening publicized its own version called “Imagen,” and it pairs a deep level of language understanding with an “unprecedented degree of photorealism.”

According to Google AI lead Jeff Dean, AI systems like these “can unlock joint human/computer creativity,” and Imagen is “one direction [the company is] pursuing.” The advancement made by the Google Research, Brain Team on its text-to-image diffusion model is the level of realism. In general, DALL-E 2 is mostly realistic with its output but a deeper look might reveal the artistic licenses made. (For more, be sure to watch this video explainer.)

Imagen builds on the power of large transformer language models in understanding text and hinges on the strength of diffusion models in high-fidelity image generation. Our key discovery is that generic large language models (e.g. T5), pretrained on text-only corpora, are surprisingly effective at encoding text for image synthesis: increasing the size of the language model in Imagen boosts both sample fidelity and image-text alignment much more than increasing the size of the image diffusion model.

To prove this advancement, Google created a benchmark for assessing text-to-image models called DrawBench. Human raters preferred “Imagen over other models in side-by-side comparisons, both in terms of sample quality and image-text alignment.” It was compared to VQ-GAN+CLIP, Latent Diffusion Models, and DALL-E 2.

Meanwhile, metrics used to prove that Imagen is better at understanding users requests include spatial relations, long-form text, rare words, and challenging prompts. Another advancement made is on a new Efficient U-Net architecture that is “more compute efficient, more memory efficient, and converges faster.”

Imagen achieves a new state-of-the-art FID score of 7.27 on the COCO dataset, without ever training on COCO, and human raters find Imagen samples to be on par with the COCO data itself in image-text alignment.

On the societal impact front, Google “decided not to release code or a public demo” of Imagen at this time given the possible misuse. Additionally:

Imagen relies on text encoders trained on uncurated web-scale data, and thus inherits the social biases and limitations of large language models. As such, there is a risk that Imagen has encoded harmful stereotypes and representations, which guides our decision to not release Imagen for public use without further safeguards in place.

That’s said, there’s an interactive demo on the site, and the research paper is available here.

More on Google AI:

- Google’s AI-powered ‘Interview Warmup’ lets you practice answering job questions

- Google Translate adds support for 24 new languages, now supports over 130

- AI Test Kitchen puts the power of Google’s language processing in your hands

FTC: We use income earning auto affiliate links. More.

Comments