Proclaiming “the age of visual search is here” and that the “camera is the next keyboard,” Google at Search On 2022 announced a trio of new features and updates for Lens, like AR Translate.

Google Lens is now used 8 billion times a month, which is up from 3 billion per month in 2021.

Multisearch, which lets you take a picture and ask a question about it, is now available in English globally, following its beta launch in April. It’s coming to over 70 languages in the next few months.

Meanwhile, the ability to add a “near me” location filter to a visual query is coming to the US later this fall. For example, Google imagines using Lens to identify the food dish you’re looking at, then appending “near me” to find where it’s sold nearby.

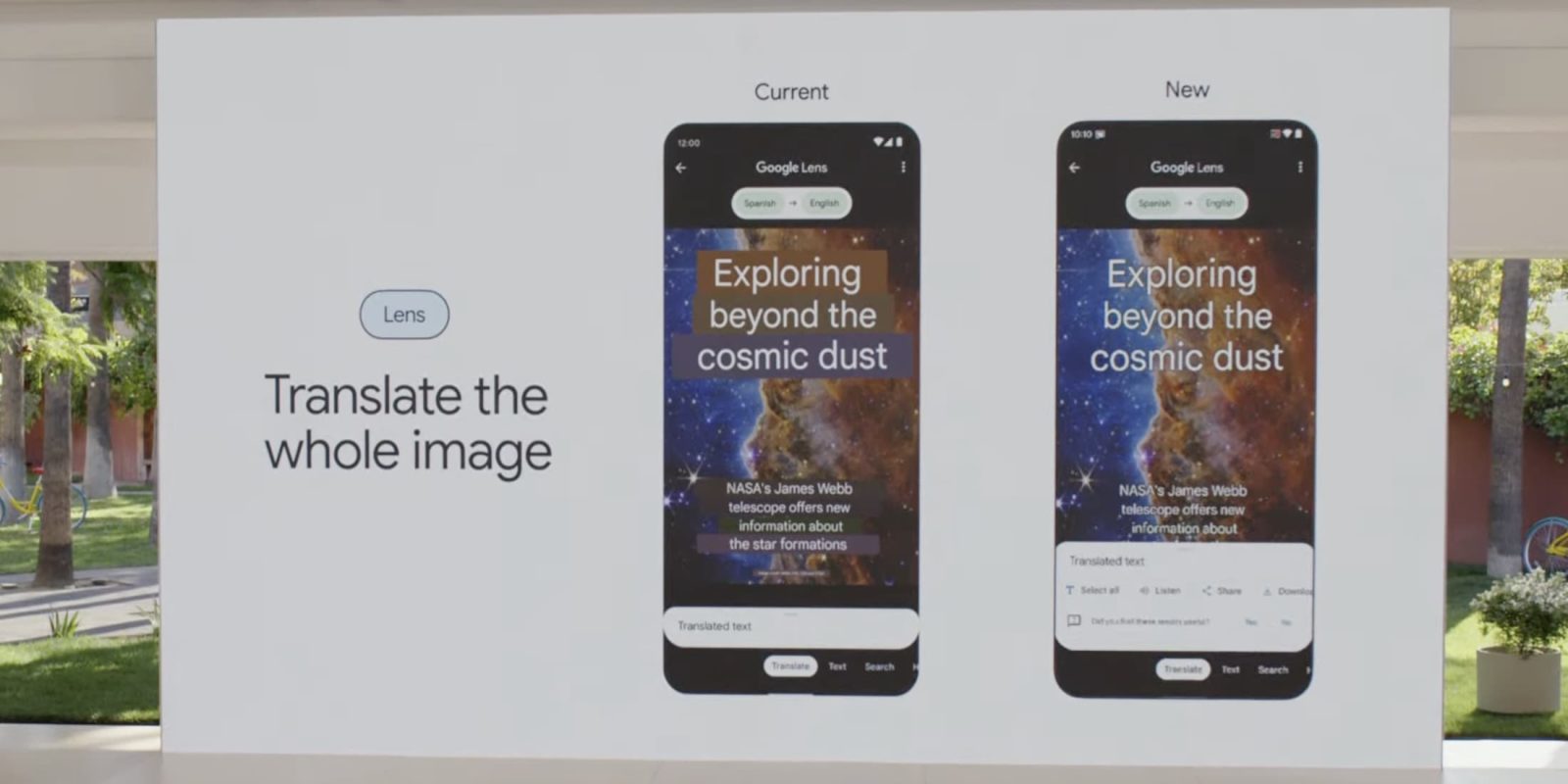

Lastly, the translate filter in Google Lens is now better at preserving the context of an image. At the moment, converted text is overlaid on top of the image with “color blocks” used to mask what’s being replaced.

Google Lens AR Translate instead swaps out the original text outright. Google uses the same technology behind Magic Eraser to do this, and the newly translated text matches the original style. It works in 100 milliseconds, both on screenshots and live in the Lens camera, and is coming “later this year.”
