What I deemed the height of an Assistant takeover came when “Google Assistant” was listed after Android in the “Operating System” section of the Pixel 3’s tech specs. A few years later, things have changed, and the clear trend for Google Assistant in 2022 is one of retreat.

Assistant in 2022

This retrenchment became clear this year, following an already quiet 2021 for Assistant (as foundational advancements remain in the wings). The first warning sign was Google shutting down Assistant Snapshot, which carried traces of what made Google Now so promising: a personalized, centralized feed that could have pulled information out of siloed apps.

The second was the removal of Assistant’s Driving Mode “Dashboard,” which, in May 2019, felt like a major coup by Assistant over Android (Auto). It took a long while to even launch and has since been discarded in favor of a Google Maps-first experience.

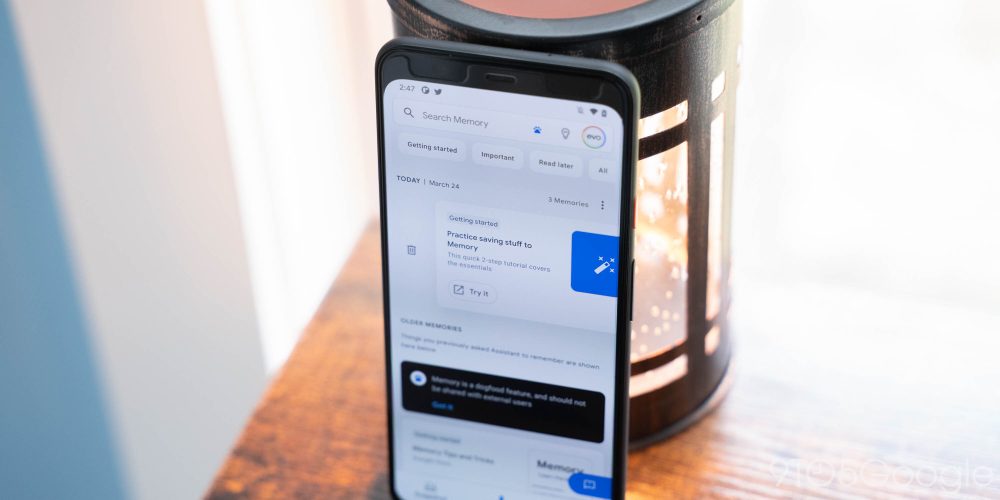

Lastly, after over a year of work, Google stopped development of Assistant Memory in June. It aimed to supercharge saving content and reminders on Android. This was a good idea: the Google app’s Collections feature is underwhelming (with a poor UI), and Memory would have been a welcome successor.

Besides all three being feeds, the important commonality among Dashboard, Snapshot, and Memory was that they were all attempts to extend Assistant beyond its voice roots. They were rife with Assistant branding but ultimately had nothing to do with Assistant’s core, original competency. Their primary claim to helpfulness was centralizing relevant information, and there’s nothing inherently Assistant about that.

Meanwhile, a report from The Information in October laid out how Google was going to “invest less in developing its Google Assistant voice-assisted search for cars and for devices not made by Google.” This was framed as focusing on and improving the experience on first-party Google products, though it also comes as the company cuts costs by consolidating various efforts.

The most significant result was the Fitbit Sense 2 and Versa 4 launching without Google Assistant, even though it was offered on the previous generation of devices. That’s also the case at launch on non-Pixel and Samsung wearables. It’s wild that Wear OS 3 devices with only Amazon Alexa exist. Another “less important” area of investment was said to be Assistant for Chromebooks.

Assistant is no longer…

At its height, Assistant looked to be the connective tissue between all of Google’s form factors, if not the main way you interact with those devices. Being the primary interface on smart speakers and headphones was straightforward enough, but Google then tried to give Assistant UIs, starting with the Smart Display. That still felt like a good idea, but in hindsight, Assistant replacing Android Auto for Phone Screens did not make a great deal of sense. Yes, voice input is a key part of getting help while driving, but Google could have left the touch-based UX to Android.

Another prime example is the original Reminders experience, which is set to be replaced by Tasks with Assistant integration and had a horrible phone-based UI for years. Interacting by voice was fine, but it’s not clear the Assistant team ever had visual expertise, and it’s better to let existing teams continue that work.

At I/O 2019, when Google announced the new on-device Assistant alongside Driving Mode, it seemed the company wanted Assistant to be another way to control your phone. There are times when that is very helpful, but it will never be the primary interaction method.

Having lived with that next-gen Assistant for a few years now (it has yet to expand beyond Pixel phones), I can say it has not changed the way I navigate my device, which Google pushed on stage at one point. There’s a touchscreen for a reason, and taps will likely remain the fastest way of doing things for the foreseeable future (at least until smart glasses). Another factor could be the lack of buy-in from apps in letting themselves be controlled by voice.

If anything, the takeaway from on-device Assistant is that the work led to Assistant voice typing on Pixel phones, and that the technology is better applied to focused experiences like transcription and editing in Gboard.

Where Assistant is going

Among the Assistant announcements Google made this year, the clear direction is improving the core voice experience. On the Nest Hub Max, you can now invoke Assistant just by looking at the Smart Display thanks to the camera-powered Look and Talk, while Quick Phrases let you skip “Hey Google” for pre-selected commands.

At I/O 2022, Google also announced that, in early 2023, Assistant will ignore “umm,” natural pauses, and self-corrections as you’re issuing a command. As with the two new ways to activate Assistant, the goal is to make the experience more natural:

To make this happen, we’re building new, more powerful speech and language models that can understand the nuances of human speech — like when someone is pausing, but not finished speaking. And we’re getting closer to the fluidity of real-time conversation with the Tensor chip, which is custom-engineered to handle on-device machine learning tasks super fast.

Meanwhile, Google is using AI to improve the accuracy of Assistant smart home commands across three areas.

Natural language understanding is what drives Assistant. It has gotten a great deal better over the past decade or so, and it’s easy to forget those advancements. That’s because our expectations are ever-growing, and the original Google Assistant seemed to be doing the same with an ever-growing reach. There were improvements to the core voice experience as Assistant spread its tendrils across all of Google’s consumer-facing products, but this looks to be a case of trying to do too much, too fast.

Google’s forever challenge is taking R&D from the lab and turning it into a good end-user experience. Assistant can be at the forefront of that for Google, especially as new form factors arrive. Trying to replace what already works has not gone well, but the company is at least now aware of that.
