Along with Maps Live View, Lens is Google’s most promising AR service, and it can now recognize skin conditions. Today, the company is also announcing new features across Maps, Search, and Shopping, including several powered by generative AI.

Google Lens

This new Google Lens capability will “search skin conditions that are visually similar to what you see on your skin.” You can crop to the affected area, and results appear in a carousel above “Visual matches.” The company notes, “Search results are informational only and not a diagnosis. Consult your medical authority for advice.”

Besides skin, it will also work with a “bump on your lip, a line on your nails, or hair loss on your head.” Google says it built this feature because “describing an odd mole or rash on your skin can be hard to do with words alone.”

Google Maps

Google previewed Glanceable Directions earlier this year to bring live trip progress directly to the directions/route overview screen and your lockscreen. You’ll get updated ETAs, reroutes, and turn-by-turn directions without having to start navigation.

This information will also appear as lockscreen notifications on Android and Live Activities on iPhone. Glanceable Directions are rolling out globally starting this month for walking, cycling, and driving on Android and iOS.

Immersive View was announced at I/O 2022 to let you fly over a location you’re researching and see it in different conditions, including time of day and weather. It’s now coming to Amsterdam, Dublin, Florence, and Venice. Google is also expanding it to “over 500 iconic landmarks around the world, from Prague Castle to the Sydney Harbour Bridge.”

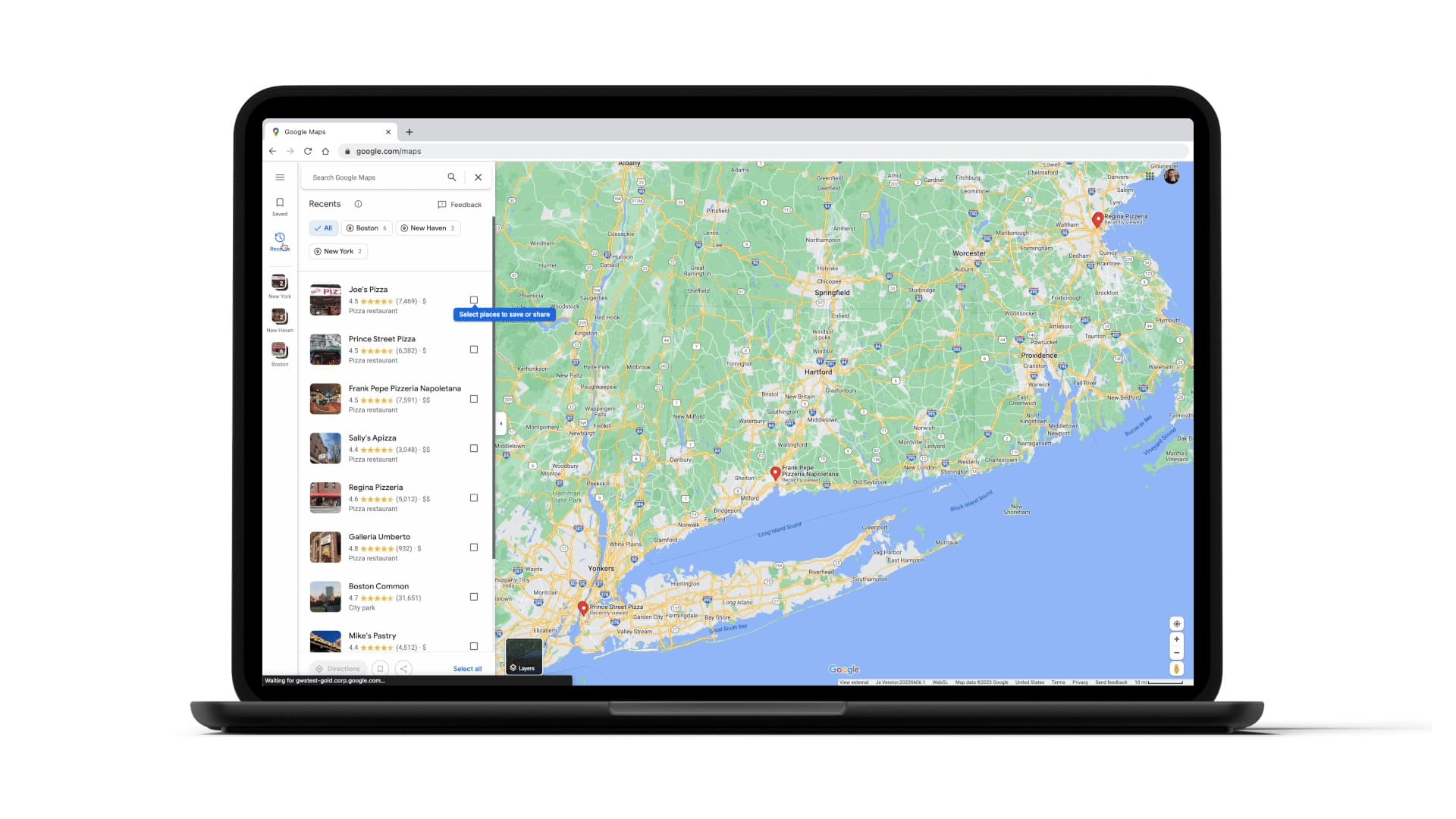

On desktop, the Recents sidebar in Google Maps will now keep the places you’ve viewed across sessions instead of clearing them automatically.

Google Search

Meanwhile, the Search Generative Experience (SGE) will now bring up an AI-powered snapshot when “you ask detailed questions about a place or destination,” like restaurants, hotels, and other attractions. This will surface information from the web, as well as user-submitted reviews, photos, and Business Profile details.

Shopping

On the Shopping front, Search is getting a virtual try-on tool that uses generative AI to predict how clothes will “drape, fold, cling, stretch, and form wrinkles and shadows on a diverse set of real models in various poses.” It works from “just one clothing image.”

Google says, “We selected people ranging in sizes XXS-4XL representing different skin tones (using the Monk Skin Tone Scale as a guide), body shapes, ethnicities and hair types.”

The feature is starting in the US with women’s tops from brands like Anthropologie, Everlane, H&M, and Loft, and support for men’s tops is coming later this year. Look for the “Try On” badge, then select a “model that resonates most with you.”

Search is also adding a feature that lets you refine clothing results by color, style, and pattern. Google is using machine learning and “new visual matching algorithms” to find these options across various retailers and stores.
