Augmented reality glasses will fundamentally change how we use technology and our relationship to the internet. Facebook is vocal about its general plans, and Microsoft has HoloLens for enterprise, while the rumors around Apple’s offering are at an all-time high. On the hardware front, Google speculation is notably absent. The lack of AR glasses rumors is not yet disconcerting due to Google’s software work and acquisitions, but it could become so soon.

Why AR

AR overlays information on what you’re looking at in the real world. It’s a simple concept, but one that will usher in a radically different way of interacting with technology. Long before smartphones, you went to a specific place in your school or home to use a computer. The miniaturization of hardware and proliferation of cellular connectivity in the early 2000s flipped that so technology follows you.

That said, there are similarities between using a phone and laptop/desktop. You’re still actively seeking out information, literally hunting and pecking for apps. Push alerts changed that dynamic in how you’re now being guided to an action, and AR will take that to a whole new level.

Notifications on AR glasses will have the potential to take over your entire vision in a very immediate manner. If not done tactfully, this could be disruptive and annoying but the immediacy provides some new opportunities.

Notifications

If you’re out in the world, mapping apps could alert you to a restaurant that fits your preferences. This is possible with a phone today, but an app sending you a push alert has to be very confident that you’d see it in time and not when you’re several blocks away. Meanwhile, a series of these alerts would be annoying if you need to pull out your phone every time one is sent. In contrast, it would be a low friction affair on glasses because the device is already out and on.

This new nature of alerts has huge ramifications for virtual assistants. Their proactive capabilities today are limited by users having to actively check a notification or open a feed/inbox (Assistant Snapshot). Like with location, notifications sent by assistive services today have to be highly confident that an alert is actually useful. Down the road, AR-based reminders could potentially be triggered by things you see in the world.

In short, notifications on AR glasses will be high-profile because of how present and immediate they are, yet oddly more subtle.

HUDs and the everywhere workspace

Heads-up displays (HUDs) have historically been associated with airplanes, but they are increasingly coming to cars. Beyond AR supercharging notifications, glasses allow for an on-demand HUD. This will be useful when navigating through a city with directions, like when to turn, overlaid right in front of you. AR will also be highly convenient for seeing instructions as you cook or perform a maintenance/assembly task. Meanwhile, imagine having your shopping list appear as you enter a store or pinning your to-dos for the day.

Once people are familiarized with floating displays and as the resolution improves, AR glasses will be used for work. The potential for virtual workspaces is tremendous. You could have an infinite number of floating screens to better multitask, and they could be used anywhere in a private manner.

Always-on everything

With smartphones today, turning off the screen means the end of what you’re doing. Once people get used to HUDs, they will want them to be always-on. That expectation of constant availability will extend to smart assistants and being able to ask for help at any time in the day wherever you are.

Today, despite the proliferation of AirPods, most people still do not talk to Siri or Google Assistant while they are out in the world due to social awkwardness. But there’s also an element of how it’s faster to look at a screen rather than listen to your virtual assistant read out a long message or notification. With AR glasses providing a screen, assistants become much more efficient and more likely to be used.

Besides screens and voice, AR will usher in the always-on camera. It might not initially be on all the time due to power constraints, but even in the first versions, people will find tremendous utility in having an always-ready camera that lets them quickly capture a first-person point of view. Further down the road, an always-on camera could see things that users miss and prompt them to action.

What Google AR has now

On the software side, Google is working on making possible many of the key experiences that people associate with AR. However, given the reality of what form factors are available today, the work is focused on smartphones.

Google has two signature AR experiences, starting with Maps. Live View provides walking directions by turning on your phone’s camera and showing giant floating arrows and pins on your screen. Under the hood, this works by Google using location and comparing what you’re currently seeing to its vast corpus of Street View data. The company calls this a Visual Positioning System that allows devices to pinpoint where you are in the world and what you’re looking at.

The next is Google Lens. Today, this visual search tool can identify text for seamless copying and translation, while it can also recognize plants, products, animals, and landmarks. A more advanced Maps integration lets you snap a menu to see recommended dishes and pictures from public reviews, while there’s a homework helper that hooks into Search.

Beyond features and tools, Google is subtly working to familiarize people with AR through the phenomenon that is 3D animals and other virtual objects in Search that can be placed in your environment. Google believes that the “easiest way to wrap your head around new information is to see it,” with this extending to education (models of the human body and cells) and commerce. You can already explore cars in Search, while a virtual showroom is more useful than just having to rely on pictures when buying given the state of the world today.

On the backend for developers, Google has persistent Cloud Anchors for creating AR layers over the real world, while their occlusion tech helps make virtual experiences more realistic. The company is also able to detect depth with a single camera.

What Google is missing for AR glasses

By being live today, these services are being actively used and optimized by millions of users. However, none of it matters unless it is miniaturized.

Speaking anecdotally to both tech enthusiasts and regular users, Google Lens is not widely used today. Instead of taking a picture to save information, users could use Lens to quickly copy the text they need and save it to their notes app. However, that’s just not an ingrained habit.

Holding up a phone to perform tasks inherently breaks you out of your current reality and forces you to focus on the new one that is the screen in front of you.

That would change when you’re physically wearing something meant to take pictures, and your AR homescreen is more or less a viewfinder. Such an interface trains you to snap pictures and have Lens analyze them for whatever help can be extracted. Google Lens could very well be the default action/tool of the first AR glasses.

Elsewhere, while AR navigation is already immensely helpful on a phone when navigating dense urban areas, glasses will provide a significantly more useful experience that is hands-free and can take over the entire screen to make sure you don’t miss a turn.

The true potential of augmented reality is realized on dedicated hardware. In their current state, AR tools are being adapted for a hardware form factor that is best suited for short interactions and is much less immersive. It’s a disservice to the AR apps of today, and it limits people’s expectations for the future technology.

Hardware status

The last public mention of hardware development by Google’s AR/VR division came in May of 2019 (via CNET) from Clay Bavor:

“On the hardware devices side, we’re much more in a mode of R&D and thoughtfully building the Lego bricks that we’re going to need in order to snap together and make some really compelling experiences.”

The VP went on to say that future devices would bring the smartphone’s “helpful experiences” to “newer and different form factors.”

Meanwhile, The Information in early 2020 reported that as of mid-2019 “former members of Google’s AR team said the company wasn’t working on a comparable effort [to Apple and Facebook’s rumored glasses].”

Since then, Google acquired smart glasses maker North. Known for Focals, the Canadian company was having funding issues while trying to release Focals 2.0. That project was canceled and employees were brought on to “join [Google’s] broader efforts to build helpful devices and services.” North joined Google’s hardware division rather than Google AR & VR, though it makes sense for any glasses to come under the “Made by Google” brand:

North’s technical expertise will help as we continue to invest in our hardware efforts and ambient computing future.

The extent of Google’s in-house miniaturization expertise today is the 2020 Pixel Buds. It’s definitely a start as voice will be one of the main input methods on smart glasses. Elsewhere, the Fitbit acquisition certainly increases the in-house wearable talent.

However, the real issue for AR today is the display technology. Chips are increasingly getting smaller and more power efficient, while on-device voice processing as seen with the new Google Assistant allows for faster interactions. The great bottleneck is screens that have a wide field of view while remaining close in size to regular glasses.

In all, there is not a very clear picture of what Google is doing in AR glasses. The talent and technology are certainly there, especially with North, but it still seems odd that Google did not have something already in development before that.

Google Glass past

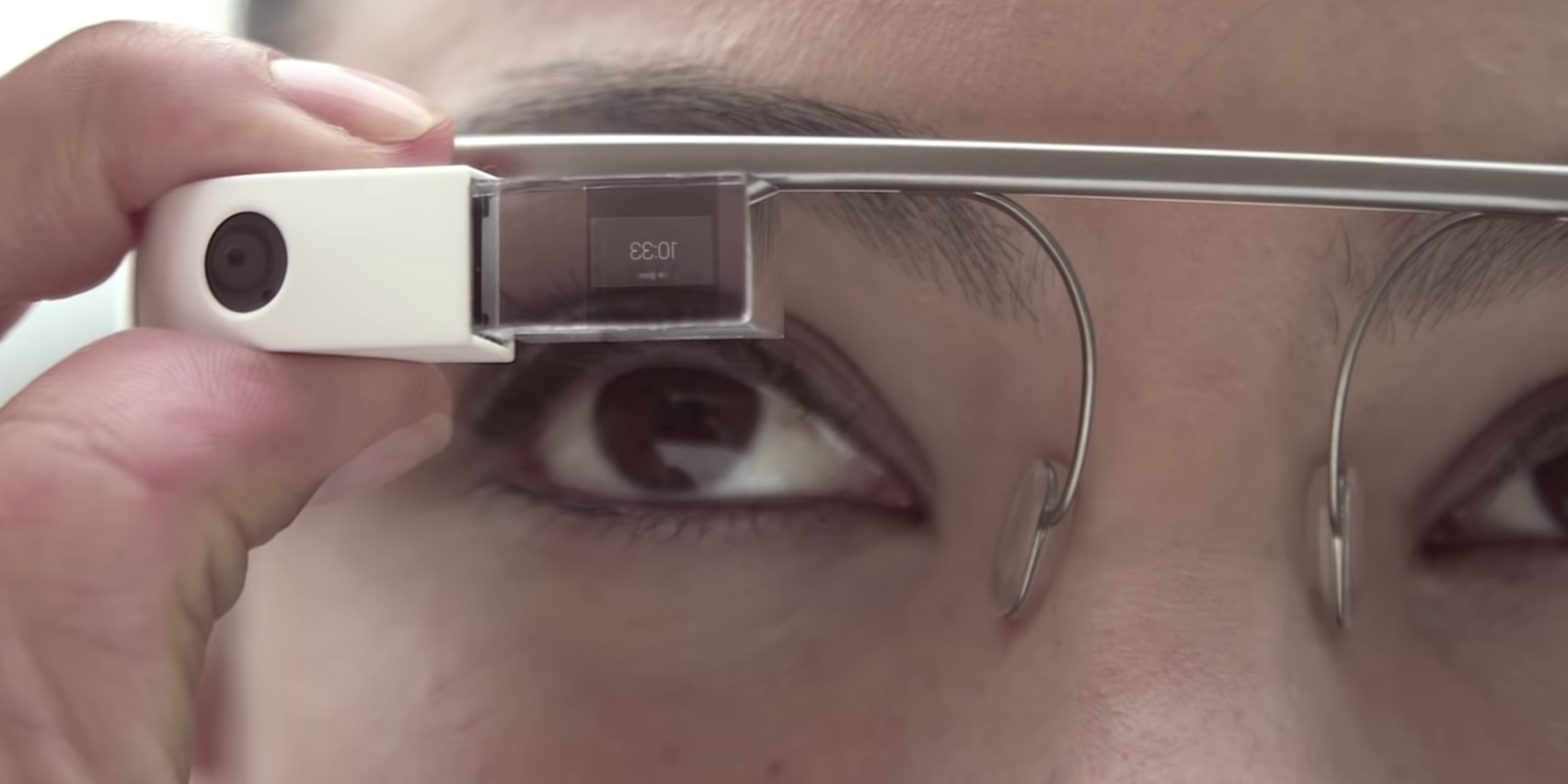

The elephant in the room is Google Glass. The same report from The Information last year described how “Google’s slowness on AR hardware was partly deliberate” due to that previous 2013-15 effort being so high-profile (including but not limited to barge watching) and having nothing to show for itself (beyond enterprise).

While that effort is widely perceived as a failure, I think Google made a mistake by shutting it down for consumer uses. Google Glass could only be loosely called AR as the device just offered a floating screen in the top-right corner of your vision. The device was not aware of your environment, and the technology was basically a dismantled smartwatch on your face in terms of utility.

That said, Google should have continued iterating in public. Glass was able to provide an early peek at how hands-free notifications could be incredibly useful. On more than a few occasions during this pandemic, I’ve found myself preferring to not take out my phone while in certain environments. I took to using Pixel Buds and Assistant for sending messages and hearing notifications, but having a screen for every one of those interactions would have been infinitely better and faster. For example, being able to see a shopping list and navigate it through voice would have been genuinely useful.

If Google had continued developing that prism display technology, it could have gotten larger (to show more information) and might have sped up other screen research and investment. Future generations would have benefited from more efficient processors and in turn battery life.

Glass didn’t need to be a mass market product to be successful, though people would have eventually discovered its utility. There is great value alone in learning how people want to use this whole new form factor. Google would have been synonymous with this up-and-coming technology.

In a way, Google shutting down Glass for a wider, non-enterprise audience mirrors how Google was first to wrist-based wearables with Android Wear but lacked the conviction to carry out that vision. We could very well be seeing a scenario where Apple ends up perfecting both the smartwatch and smart glasses, even though Google had entries in both fields first.

In doing so, Google would be losing control and the ability to shape the future. The smartphone killed MP3 players, point-and-shoot cameras, and countless other single-purpose gadgets, while it does a pretty good job of replicating a laptop’s functionality. If there’s anything that could possibly replace that form factor as your daily driver and work device, it’s glasses.

Google’s success and market dominance today are born out of having the dominant smartphone OS, platform, and ecosystem. If Google does not figure out smart glasses, it will very literally be missing from people’s vision.

Facebook, which lost mobile, is increasingly getting locked out by Apple on iOS. The social media giant is trying its hardest to not be frozen out again (and reduced to apps) by building the next hardware form factor and OS.

There is a potential future where Lens and Maps are available as services on everyone’s AR glasses, but Google would be at the whim of OS vendors. Meanwhile, everyone else, after seeing how smartphones played out, wants end-to-end control of the experience and does not want to let a large competitor have free rein over user interactions and data.

Augmented tea leaves

Instead of AR, when Google’s hardware division talks about technology of the future it consistently focuses on ambient computing:

We think technology can be even more useful when computing is anywhere you need it, always available to help you. Your devices fade into the background, working together with AI and software to assist you throughout your day. We call this ambient computing.

Hardware chief Rick Osterloh wrote the above to recap the company’s 2019 hardware lineup that October. He tellingly reiterated that in June of 2020 when announcing the North acquisition:

We’re building towards a future where helpfulness is all around you, where all your devices just work together and technology fades into the background. We call this ambient computing.

AR glasses are the form factor that Google can create to “assist you throughout your day,” and one that, when done right, “fades into the background.” Google might have learned with Glass to not rush into an early technology, but its history with nascent technology and hardware does not mean it gets the benefit of the doubt.
